Last Updated on July 20, 2023

With the explosion in popularity of generative AI in general and ChatGPT in particular, prompting has become an increasingly important skill for those in the world of AI. Crafting a prompt, the mechanism of interacting with a large language model (LLM) such as ChatGPT, is not the simple syntactic undertaking it would first appear to be. After the novelty of the first interchanges with ChatGPT, it became clear that practice and consideration would be needed for prompt mastery. As a result, developing processes to create the most useful prompts possible – known as prompt engineering – has become a coveted expertise in LLM circles and beyond.

In this post, you will learn about prompt engineering. In particular,

- How to provide information in the prompt for the greatest impact on the responses

- What are personas, positive and negative prompts, zero-shot prompts, and so on

- How to use prompts iteratively to leverage the conversational nature of ChatGPT


Let’s get started.

Prompt Engineering for Effective Interaction with ChatGPT

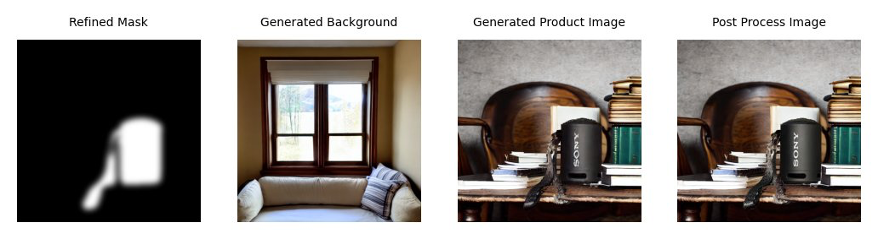

Picture generated by Adrian Tam using Stable Diffusion. Some rights reserved.

Overview

This post is divided into four parts; they are

- Principles of Prompting

- Basic Prompt Engineering

- Advanced Prompt Engineering Strategies

- Collaborative Power Tips

Principles of Prompting

Prompt engineering is one of the most crucial aspects of using LLMs effectively and is a powerful tool for customizing interactions with ChatGPT. It involves crafting clear and specific instructions or queries to elicit the desired responses from the language model. By carefully constructing prompts, users can guide ChatGPT’s output toward their intended goals and obtain more accurate and useful responses.

There are several foundational techniques to remember in the process of prompt optimization for ChatGPT.

First, providing explicit instructions at the beginning of the prompt helps set the context and define the task for the model. Specifying the format or type of the expected answer is also beneficial. Additionally, you can enhance the interaction by incorporating system messages or role-playing techniques in the prompt.

Below is an example prompt with the above techniques:

I would like you to generate 10 quick-prep dinner meal ideas for recipe blogs, with each idea including a title and a one sentence description of the meal. These blogs will be written for an audience of parents looking for easy-to-prepare family meals. Output the results as a bulleted list.

Compare that prompt with the following:

Write 10 recipe blogs.

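Intuitively, the former will garner more useful results: it states the task, the number of ideas, what each idea should contain, the audience, and the output format, while the latter leaves all of these for the model to guess.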

Remember that you can improve the quality and relevance of the model’s responses by iteratively refining and experimenting with prompts, creating more productive conversations. Don’t be afraid to test out potential prompts on ChatGPT directly.
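If you work with a chat model from code rather than through the ChatGPT interface, the explicit instruction, output format, and system message described above map naturally onto a list of role-tagged messages. Below is a minimal sketch assuming the common `{"role": ..., "content": ...}` chat message layout; `build_messages` is a hypothetical helper, the system message text is an invented example, and no API call is made.

```python
# A minimal sketch: a structured prompt expressed as role-tagged chat messages.
# The {"role": ..., "content": ...} layout follows the common chat message format;
# build_messages is a hypothetical helper, and nothing is sent to any API.


def build_messages(system: str, task: str, audience: str, output_format: str) -> list[dict]:
    """Combine a system message with an explicit, specific user instruction."""
    user_prompt = (
        f"{task} "
        f"The intended audience is {audience}. "
        f"Output the results as {output_format}."
    )
    return [
        {"role": "system", "content": system},  # sets the persona/context
        {"role": "user", "content": user_prompt},  # task, audience, and format
    ]


messages = build_messages(
    system="You are a helpful food writer who specializes in family cooking.",  # invented example
    task=(
        "Generate 10 quick-prep dinner meal ideas for recipe blogs, with each idea "
        "including a title and a one sentence description of the meal."
    ),
    audience="parents looking for easy-to-prepare family meals",
    output_format="a bulleted list",
)

for message in messages:
    print(f"{message['role']}: {message['content']}")
```

Keeping the task, audience, and format as separate arguments makes it easy to vary one of them at a time while experimenting.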

Basic Prompt Engineering

Now that you know what a basic prompt should look like, let’s look at some fundamental prompt engineering considerations in more detail.

Prompt Wording

A prompt’s wording is paramount, as it guides the LLM in generating the desired output. It’s important to phrase the question or statement in a way that ChatGPT understands and can respond to accurately.

For example, if a user is not an expert in an area and does not know the right terms to phrase a question, the answers ChatGPT provides may be limited. It is similar to searching the web without knowing the correct keywords.

While it may be evident that additional information could be used to create better prompts, it may be less obvious that being overly verbose in general is not necessarily an optimal strategy. It’s best to think of prompt wording not as a separate technique but as a connecting thread through all other techniques.

Succinctness

Succinctness in a prompt is important for clarity and precision. A well-crafted prompt should be concise and to the point, providing enough information for ChatGPT to understand the user’s intent without being overly verbose. However, it is equally vital that the prompt not be too brief, as that may lead to ambiguity or misunderstanding. This balance between not enough and too much can be tricky to strike, and practice is probably the best way to master it.

Wording and succinctness both matter because, together, they determine how specific the prompt is.

Roles and Goals

In prompt engineering, roles are the personas assigned to the LLM and to the intended audience. For example, if one is interested in having ChatGPT write an outline for a blog post on machine learning classification metrics, explicitly stating that the LLM is to act as an expert machine learning practitioner and that its intended audience is data science newcomers would likely help produce a fruitful response. Whether this should be stated in conversational language (“You are to act as a real estate agent with 10 years experience in the Phoenix area”) or in a more formal manner (“Author: expert Phoenix real estate agent; Audience: inexperienced home buyers”) is something you can experiment with in a given scenario.

Goals are intimately connected to roles. Explicitly stating the goal of a prompt-guided interaction is not only a good idea but also necessary. Without it, how would ChatGPT have any inkling of what output to generate?

Below is a competent prompt that has considered both roles and goals:

You are to act as a real estate agent with 10 years experience in the Phoenix area. Your goal is to produce a one paragraph summary of each of the top 5 family neighborhoods in the Phoenix metropolitan area. The intended audience is inexperienced home buyers.

Along with the explicitly stated roles and goals, note the relative specificity of the example prompt above.
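If you reuse the same role and goal across many prompts, it can help to assemble them from parts. The sketch below is hypothetical (`role_goal_prompt` is not part of any library) and produces either the conversational or the formal style described earlier; the `Goal:` field in the formal style is an assumption added for symmetry with the conversational version.

```python
# A minimal sketch: compose a prompt from a role, a goal, and an audience,
# in either a conversational or a compact "Field: value" style.
# role_goal_prompt is a hypothetical helper, not part of any library.


def role_goal_prompt(role: str, goal: str, audience: str, formal: bool = False) -> str:
    if formal:
        # Compact style; the "Goal" field is an assumed addition
        return f"Author: {role}; Goal: {goal}; Audience: {audience}"
    # Conversational, full-sentence style
    return (
        f"You are to act as {role}. "
        f"Your goal is to {goal}. "
        f"The intended audience is {audience}."
    )


role = "a real estate agent with 10 years experience in the Phoenix area"
goal = ("produce a one paragraph summary of each of the top 5 family "
        "neighborhoods in the Phoenix metropolitan area")
audience = "inexperienced home buyers"

print(role_goal_prompt(role, goal, audience))
print(role_goal_prompt(role, goal, audience, formal=True))
```

With these inputs, the conversational version reproduces the example prompt above word for word, so you can compare both styles on the same task.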

Positive and Negative Prompting

Positive and negative prompting is another set of framing methods to guide the model’s output. Positive prompts (“do this”) encourage the model to include specific types of output and generate certain types of responses. Negative prompts (“don’t do this”), on the other hand, discourage the model from including specific types of output and generating certain types of responses. Using positive and negative prompts can greatly influence the direction and quality of the model’s output.

Consider the following example prompt:

You are to act as a real estate agent with 10 years experience in the Phoenix area. Your goal is to produce a one paragraph summary of each of the top 5 family neighborhoods in the Phoenix metropolitan area. The intended audience is inexperienced home buyers.

The framing of the above prompt is positive in nature, providing guidance on what ChatGPT should generate. Let’s add some wording to discourage certain output, be it in content or format. An example of negative prompting geared toward content guidance could be the addition of the following to our above example:

Do not include any neighborhoods within 5 miles of downtown or adjacent to the airport.

This additional constraint should be helpful in ChatGPT’s understanding of what output it should generate.
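The same idea can be applied programmatically by appending positive and negative instructions to a base prompt. This is a minimal sketch; `add_constraints` is a hypothetical helper, and the positive instruction used below (“Mention one nearby park or school…”) is an invented example, not part of the original prompt.

```python
# A minimal sketch: append positive ("do this") and negative ("don't do this")
# instructions to a base prompt. add_constraints is a hypothetical helper.


def add_constraints(base_prompt: str, do: tuple[str, ...] = (),
                    dont: tuple[str, ...] = ()) -> str:
    parts = [base_prompt.strip()]
    # Positive prompts: steer the model toward specific content or format
    parts.extend(instruction.strip() for instruction in do)
    # Negative prompts: steer the model away from specific content or format
    parts.extend(f"Do not {instruction.strip()}" for instruction in dont)
    return " ".join(parts)


base = ("You are to act as a real estate agent with 10 years experience in the "
        "Phoenix area. Your goal is to produce a one paragraph summary of each of "
        "the top 5 family neighborhoods in the Phoenix metropolitan area. "
        "The intended audience is inexperienced home buyers.")

prompt = add_constraints(
    base,
    do=("Mention one nearby park or school for each neighborhood.",),  # invented example
    dont=("include any neighborhoods within 5 miles of downtown or adjacent to the airport.",),
)
print(prompt)
```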

Advanced Prompt Engineering Strategies

Let’s look at a selection of more advanced prompt engineering strategies. While the previous section provided some general guidelines for interacting with LLMs, the strategies below are commonplace in the prompt engineer’s toolkit and let you interact with ChatGPT in more complex ways.

Input/Output Prompting

The input/output prompting strategy involves defining the input that the user provides to the LLM and the output that the LLM is to generate in response. This strategy is fundamental to prompt engineering as it directly influences the quality and relevance of ChatGPT’s response.

For instance, the user might provide an input prompt asking ChatGPT to generate a Python script for a specific task, and the desired output would be the generated script.

Below is an example of the most basic strategy: provide a single input and desire a single output.

Generate a Python script that takes a single mandatory command line argument ([project]) and performs the following tasks:

– creates a new folder named [project]

– creates a file within the new folder named [project].py

– writes a simple Python script file header to the [project].py file
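What ChatGPT returns for this prompt will vary from run to run. For a rough idea of a reasonable result, here is a minimal sketch of such a script. The header contents (a shebang, a module docstring, and a creation date) and the choice to refuse an existing folder are assumptions, since the prompt leaves them unspecified.

```python
# A sketch of one plausible response to the input/output prompt above.
# The header contents and the refusal to overwrite an existing folder are assumptions.
import argparse
import sys
from datetime import date
from pathlib import Path
from typing import Optional

# Simple file header: shebang, module docstring, creation date (assumed format)
HEADER_TEMPLATE = '''#!/usr/bin/env python3
"""
{name}.py

Created on {created}.
"""
'''


def create_project(project: str, base_dir: Path = Path(".")) -> Path:
    """Create base_dir/<project>/ containing <project>.py with a simple header."""
    folder = base_dir / project
    folder.mkdir()  # raises FileExistsError if the folder already exists
    script = folder / f"{project}.py"
    script.write_text(HEADER_TEMPLATE.format(name=project, created=date.today().isoformat()))
    return script


def main(argv: Optional[list[str]] = None) -> int:
    """Parse the single mandatory [project] argument and create the project."""
    parser = argparse.ArgumentParser(description="Create a new Python project folder.")
    parser.add_argument("project", help="name of the new folder and of the .py file inside it")
    args = parser.parse_args(argv)  # argv=None means "use sys.argv[1:]"
    try:
        script = create_project(args.project)
    except FileExistsError:
        print(f"error: folder '{args.project}' already exists", file=sys.stderr)
        return 1
    print(f"Created {script}")
    return 0
```

Saved as, say, `new_project.py` with the usual `if __name__ == "__main__": sys.exit(main())` guard added at the bottom, it would be run as `python new_project.py myproject`. Splitting the file logic into `create_project` keeps it testable without touching the command line.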

Zero-Shot Prompting

The zero-shot strategy involves the LLM generating an answer without any examples or context. This strategy can be useful when the user wants a quick answer without providing additional detail, or when the topic is so general that examples would artificially limit the response. For example:

Generate 10 possible names for my new dog.

One-Shot Prompting

The one-shot strategy involves the LLM generating an answer based on a single example or piece of context provided by the user. This strategy can guide ChatGPT’s response and ensure it aligns with the user’s intent. The idea here would be that one example would provide more guidance to the model than none. For example:

Generate 10 possible names for my new dog.

A dog name that I like is Banana.

Few-Shot Prompting

The few-shot strategy involves the LLM generating an answer based on a few examples or pieces of context provided by the user. This strategy can guide ChatGPT’s response and ensure it aligns with the user’s intent. The idea here would be that several examples would provide more guidance to the model than one. For example:

Generate 10 possible names for my new dog.

Dog names that I like include:

– Banana

– Kiwi

– Pineapple

– Coconut

As you might guess, the more examples the prompt includes, the more closely the generated output tends to conform to what is desired. With zero-shot, there may be no fruit names suggested; with one-shot, there may be several; and with few-shot, the suggestions may consist entirely of fruit-themed names.
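The only thing that changes between the zero-, one-, and few-shot versions of the dog-name prompt is how many examples are attached. A minimal sketch, using a hypothetical `shot_prompt` helper (not a library function) that lists the examples as bullets:

```python
# A minimal sketch: build zero-, one-, and few-shot versions of the same prompt
# by varying how many examples are attached. shot_prompt is a hypothetical helper.


def shot_prompt(task: str, examples: list[str],
                intro: str = "Examples that I like include:") -> str:
    """Attach zero, one, or several examples to a task description."""
    if not examples:
        return task  # zero-shot: the task alone, no examples
    lines = [task, "", intro]
    lines.extend(f"- {example}" for example in examples)
    return "\n".join(lines)


task = "Generate 10 possible names for my new dog."
favorites = ["Banana", "Kiwi", "Pineapple", "Coconut"]

zero_shot = shot_prompt(task, [])
one_shot = shot_prompt(task, favorites[:1], intro="A dog name that I like is:")
few_shot = shot_prompt(task, favorites, intro="Dog names that I like include:")

print(few_shot)
```

Generating the three variants from one function makes it easy to send each to the model and compare how strongly the fruit theme carries through.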

Chain-of-Thought Prompting

The chain-of-thought strategy involves providing the LLM with a few examples that help to refine the original question and encourage a more accurate and comprehensive answer. Chain-of-thought prompts are so called because their examples spell out the chain of intermediate reasoning steps that leads to each answer, rather than the answer alone. This differs from the X-shot prompting techniques above: chain-of-thought prompts are structured to encourage critical thinking, and are designed to help uncover new insights or approaches that ChatGPT may not otherwise have considered.

The technique also encourages the LLM to output its critical reasoning. The calling card of chain-of-thought prompting is the phrase “Let’s think step by step,” which is generally appended to the end of a prompt; research suggests that this phrase alone can be enough to improve generated results.

Consider the following one-shot prompt example:

Q: Joe has 20 eggs. He buys 2 more cartons of eggs. Each carton contains 12 eggs. How many eggs does Joe have now?

A: The answer is 44.

Q: Farmer John had 93 chickens. If he sold 20 to Farmer Bill and bought twice that number more, how many chickens does Farmer John have now?

And consider ChatGPT’s incorrect output response:

The answer is 73.

Now let’s look at the following chain of thought prompt, which is written to encourage critical thinking by the LLM:

Q: Joe has 20 eggs. He buys 2 more cartons of eggs. Each carton contains 12 eggs. How many eggs does Joe have now? Let’s think step by step.

A: Joe started with 20 eggs. 2 cartons of 12 eggs is 24 eggs. 20 + 24 = 44. Therefore, Joe has 44 eggs, and the answer is 44.

Q: Farmer John had 93 chickens. If he sold 20 to Farmer Bill and bought twice that number more, how many chickens does Farmer John have now? Let’s think step by step.

ChatGPT’s response is different this time:

A: Farmer John sold 20 chickens to Farmer Bill, so he was left with 93 – 20 = 73 chickens. He then bought twice the number of chickens he sold, so he bought 2 * 20 = 40 chickens. Adding these newly bought chickens to his remaining stock, Farmer John now has 73 + 40 = 113 chickens.

By providing an example of the critical thinking process needed to solve this problem, and coaching it to think step by step, ChatGPT could apply the same reasoning to our question and come up with the right answer this time.
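If you build chain-of-thought prompts often, the structure of the egg/chicken example can be captured in a small template: each worked exemplar shows its reasoning before the answer, and every question ends with the trigger phrase. This is a minimal sketch; the `Exemplar` class and `cot_prompt` helper are hypothetical, and the exact wording of the answer line is an assumption.

```python
# A minimal sketch: assemble a chain-of-thought prompt from worked exemplars.
# The Exemplar class and cot_prompt helper are hypothetical, for illustration only.
from dataclasses import dataclass

COT_TRIGGER = "Let's think step by step."


@dataclass
class Exemplar:
    question: str
    reasoning: str  # intermediate steps written out in full
    answer: str


def cot_prompt(exemplars: list[Exemplar], question: str) -> str:
    """Show each exemplar's reasoning, then pose the new question with the same trigger."""
    blocks = [
        f"Q: {ex.question} {COT_TRIGGER}\n"
        f"A: {ex.reasoning} Therefore, the answer is {ex.answer}."
        for ex in exemplars
    ]
    # The new question gets the trigger phrase but no answer; the model supplies it
    blocks.append(f"Q: {question} {COT_TRIGGER}")
    return "\n\n".join(blocks)


eggs = Exemplar(
    question=("Joe has 20 eggs. He buys 2 more cartons of eggs. "
              "Each carton contains 12 eggs. How many eggs does Joe have now?"),
    reasoning=("Joe started with 20 eggs. 2 cartons of 12 eggs is 24 eggs. "
               "20 + 24 = 44."),
    answer="44",
)

prompt = cot_prompt(
    [eggs],
    "Farmer John had 93 chickens. If he sold 20 to Farmer Bill and bought twice "
    "that number more, how many chickens does Farmer John have now?",
)
print(prompt)
```

Both problems are easy to check by hand (20 + 2 × 12 = 44 and 93 − 20 + 2 × 20 = 113), which makes them a convenient test case when comparing a plain one-shot prompt with its chain-of-thought version.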

Self-Criticism

The self-criticism strategy involves prompting the LLM to assess its output for potential inaccuracies or areas for improvement. This strategy can help make the information provided by ChatGPT more accurate. It can also aid users in debugging their prompts and determining why they are not getting results that meet expectations.

An example of such a prompt is as follows:

Please re-read your above response. Do you see any issues or mistakes with your response? If so, please identify these issues or mistakes and make the necessary edits.

The following example coaches ChatGPT to use self-criticism to debug code it has generated:

Look at the code you have just generated. Currently it does not run. Are you able to see any syntax errors or flow control mistakes that you are able to rectify? If so, please identify the section of problematic code and re-generate it.

Harnessing ChatGPT’s self-healing abilities is a prompt engineering technique you must not overlook.
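When talking to a model programmatically, self-criticism becomes a simple loop: get an answer, then send the review prompt one or more times, keeping the whole conversation as context. In this minimal sketch, `ask` stands in for whatever function sends the message history to the model and returns its reply; it is an assumption, not a specific library API, and `fake_model` is an offline stand-in so the sketch runs on its own.

```python
# A minimal sketch of a self-criticism loop. `ask` is any callable that takes the
# message history and returns the model's reply; it is an assumed interface.
from typing import Callable

Message = dict[str, str]

CRITIQUE_PROMPT = (
    "Please re-read your above response. Do you see any issues or mistakes with "
    "your response? If so, please identify these issues or mistakes and make the "
    "necessary edits."
)


def self_critique(ask: Callable[[list[Message]], str], task: str,
                  rounds: int = 1) -> list[Message]:
    """Ask for an answer, then ask the model to review and revise it `rounds` times."""
    history: list[Message] = [{"role": "user", "content": task}]
    history.append({"role": "assistant", "content": ask(history)})
    for _ in range(rounds):
        history.append({"role": "user", "content": CRITIQUE_PROMPT})
        history.append({"role": "assistant", "content": ask(history)})
    return history


def fake_model(history: list[Message]) -> str:
    """Offline stand-in: a draft on the first turn, a numbered revision afterwards."""
    turn = sum(1 for m in history if m["role"] == "assistant")
    return "draft answer" if turn == 0 else f"revised answer (pass {turn})"


conversation = self_critique(fake_model, "Write a haiku about prompt engineering.", rounds=2)
for message in conversation:
    print(f"{message['role']}: {message['content'][:60]}")
```

More rounds are not automatically better; in practice one or two review passes are a reasonable starting point before checking the output yourself.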

Iterative

The iterative or expansive strategy involves prompting the LLM with follow-up prompts based on the output of an initial prompt. This involves iterating on the results by asking further questions or making additional requests based on each successive response.

For example, consider having ChatGPT assist in creating an outline for a book you are writing. The first prompt could look like this:

I am writing a book on time travel theories. I have not settled on a specific topic. Generate 5 specific topic suggestions for such a book. For each suggestion, provide a title and one paragraph of description of what the book would cover. The book will be aimed at casual readers.

Now, suppose one of the suggested topics is as follows:

Title: “Temporal Paradoxes: Navigating the Complexities of Time Travel”

Description: “Temporal Paradoxes” delves into the mind-bending intricacies of time travel paradoxes, exploring the fascinating conundrums they present. This book explores various paradoxes such as the Bootstrap Paradox, the Predestination Paradox, and the Information Paradox, among others. …

You could then iterate on this using a follow-up prompt:

I will write the book you suggested, “Temporal Paradoxes: Navigating the Complexities of Time Travel”. Generate a chapter outline for this book, including sections and subsections.

A possible output excerpt is below:

Introduction

– Defining temporal paradoxes

– Significance of temporal paradoxes in time travel narratives

– Overview of the chapter structure

Chapter 1: The Bootstrap Paradox

1.1 The essence of the Bootstrap Paradox

– Explaining the concept and its origin

– Key examples from literature, film, and real-life anecdotes

…

This iteration can then continue. Further prompting could be:

Let’s flesh out the introduction. Generate a two paragraph summary of what each of the chapter’s sections will cover. The chapter outline for the introduction is below.

– – –

Introduction

– Defining temporal paradoxes

– Significance of temporal paradoxes in time travel narratives

– Overview of the chapter structure

You can see how further iteration could continue in this example. A similar iteration could be used to have ChatGPT generate code: starting with an overview, iterating on the overview to generate modules, iterating on the modules to generate functions, and finally generating the code within the functions. Just as breaking big problems into smaller, more manageable problems is generally a prescription for success with humans, ChatGPT tends to handle larger tasks better when they are broken into more approachable chunks.
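Iterative prompting works because every follow-up is answered in the context of the earlier turns. The sketch below keeps that running history explicitly. `Conversation` is a hypothetical class, `ask` is an assumed callable that sends the history to a model and returns its reply, and `echo_model` is an offline stand-in; the prompts are shortened versions of the ones in the book example.

```python
# A minimal sketch of iterative prompting: keep the running message history so
# each follow-up is answered in the context of earlier responses.
# Conversation and the `ask` callable are hypothetical stand-ins, not a real API.
from typing import Callable

Message = dict[str, str]


class Conversation:
    def __init__(self, ask: Callable[[list[Message]], str]):
        self.ask = ask
        self.history: list[Message] = []

    def send(self, prompt: str) -> str:
        """Send one prompt with all prior turns attached; record and return the reply."""
        self.history.append({"role": "user", "content": prompt})
        reply = self.ask(self.history)
        self.history.append({"role": "assistant", "content": reply})
        return reply


def echo_model(history: list[Message]) -> str:
    """Offline stand-in: reports how many user prompts it has seen so far."""
    n = sum(1 for m in history if m["role"] == "user")
    return f"response to prompt {n}"


chat = Conversation(echo_model)
steps = [
    "I am writing a book on time travel theories. Generate 5 specific topic suggestions for such a book.",
    "Generate a chapter outline for the book you suggested, including sections and subsections.",
    "Let's flesh out the introduction. Generate a two paragraph summary of each of its sections.",
]
for step in steps:
    print(chat.send(step))
```

In a real session, you would read each reply before writing the next prompt, as in the book example, rather than sending a fixed list.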

Collaborative Power Tips

The best way to look at ChatGPT is as a junior assistant, be that a research assistant, coding assistant, problem-solving assistant, or whatever assistant you require. Recognizing and fostering this collaborative atmosphere can lead to further success. Here are a few quick tips for promoting this collaboration.

Prompting for Prompts

One way to improve your prompt crafting is to get ChatGPT involved. A prompt like this could lead to beneficial results:

What prompt could I use right now to further help you in this task?

ChatGPT should then generate suggestions for useful prompts that you could use on it to strengthen its further responses.

Model-Guided Prompting

Model-guided prompting involves instructing the LLM to prompt you for the information needed to complete a requested task. This is similar to telling someone, “ask me what you need to know.”

I would like you to write a Python program to manage my client information, which is stored in a Google Sheet. Please ask me whatever questions you need answers to in order to undertake this assignment.

Leaving it to ChatGPT to decide what information it needs to perform a task can be beneficial, as it takes out some of the guesswork and can reduce hallucination. Of course, a poorly crafted prompt for model-guided prompting could leave you fielding a number of unrelated questions from ChatGPT, so the initial prompt still needs to be thoughtfully written.

Conclusion

Once you become comfortable with the prompt engineering strategies laid out here, you can seek out other, more complex and higher-performing approaches. Some of these strategies include tree of thoughts, reflexion, and self-consistency, among others. Additional strategies are being developed regularly; between the time this was written and when you are reading it, there have no doubt been some interesting developments on this front.

Remember, the point of prompt engineering is to convey your intentions and desires to ChatGPT in a way that can be clearly and unambiguously understood by the LLM, so that it can act upon the request in such a way that produces the desired output as closely as possible. If you keep this in mind, move forward with the presented strategies, and hone your prompt engineering skills through regular practice, you will find ChatGPT to be a genuinely useful junior assistant, willing and able to help whenever you need it.

Just so long as you ask it properly.