At Apple, we believe privacy is a fundamental human right. Our work to protect user privacy is informed by a set of privacy principles, and one of those principles is to prioritize using on-device processing. By performing computations locally on a user’s device, we help minimize the amount of data that is shared with Apple or other entities. Of course, a user may request on-device experiences powered by machine learning (ML) that can be enriched by looking up global knowledge hosted on servers. To uphold our commitment to privacy while delivering these experiences, we have implemented a combination of technologies to help ensure these server lookups are private, efficient, and scalable.

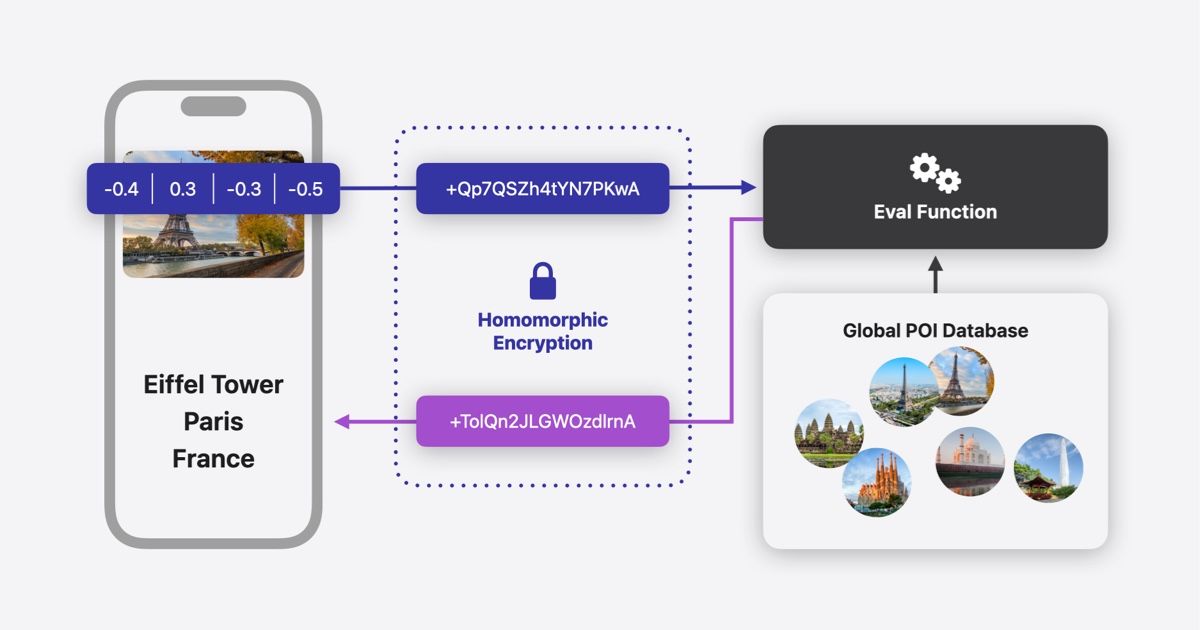

One of the key technologies we use to do this is homomorphic encryption (HE), a form of cryptography that enables computation on encrypted data (see Figure 1). HE is designed so that a client device encrypts a query before sending it to a server, and the server operates on the encrypted query and generates an encrypted response, which the client then decrypts. The server does not decrypt the original request or even have access to the decryption key, so HE is designed to keep the client query private throughout the process.
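The encrypt, compute, decrypt flow described above can be illustrated with a toy example. The sketch below uses the Paillier cryptosystem rather than the scheme discussed in this article, because it fits in a few lines; Paillier only supports addition and multiplication by a plaintext constant. The primes, the function names, and the `3x + 5` server computation are all illustrative assumptions, and the key size is far too small to be secure.

```python
import math
import secrets

# Toy Paillier parameters (assumption: illustrative only, NOT secure).
P, Q = 1_000_003, 1_000_033
N = P * Q                      # public key
N2 = N * N
PHI = (P - 1) * (Q - 1)        # private key material, held only by the client
MU = pow(PHI, -1, N)           # modular inverse; valid for generator N + 1


def encrypt(m: int) -> int:
    """Encrypt an integer 0 <= m < N using only the public key N."""
    while True:
        r = secrets.randbelow(N - 1) + 1
        if math.gcd(r, N) == 1:
            break
    return (1 + m * N) * pow(r, N, N2) % N2


def decrypt(c: int) -> int:
    """Decrypt with the private values PHI and MU."""
    return (pow(c, PHI, N2) - 1) // N * MU % N


def add(c1: int, c2: int) -> int:
    """Ciphertext of (m1 + m2), computed without decrypting."""
    return c1 * c2 % N2


def scale(c: int, k: int) -> int:
    """Ciphertext of (k * m) for a plaintext constant k."""
    return pow(c, k, N2)


def server_compute(encrypted_query: int) -> int:
    """Server side: evaluate 3x + 5 on an encrypted x. No private key is used."""
    return add(scale(encrypted_query, 3), encrypt(5))


if __name__ == "__main__":
    query = encrypt(12)                  # client encrypts its query
    response = server_compute(query)     # server works on ciphertext only
    print(decrypt(response))             # client decrypts the result of 3*12 + 5
```

The server function never touches `PHI` or `MU`, which mirrors the property that the server has no access to the decryption key.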

At Apple, we use HE in conjunction with other privacy-preserving technologies to enable a variety of features, including private database lookups and ML. We also use a number of optimizations and techniques to balance the computational overhead of HE with the latency and efficiency demands of production applications at scale. In this article, we’re sharing an overview of how we use HE along with technologies like private information retrieval (PIR) and private nearest neighbor search (PNNS), as well as a detailed look at how we combine these and other privacy-preserving techniques in production to power Enhanced Visual Search for Photos while protecting user privacy (see Figure 2).

Introducing HE into the Apple ecosystem provides the privacy protections that make it possible for us to enrich on-device experiences with private server lookups. To make it easier for the developer community to adopt HE for their own applications, we have open-sourced swift-homomorphic-encryption, an HE library. See this post for more information.

Apple’s Implementation of Homomorphic Encryption

Our implementation of HE needs to allow operations common to ML workflows to run efficiently at scale, while achieving an extremely high level of security. We have implemented the Brakerski-Fan-Vercauteren (BFV) HE scheme, which supports homomorphic operations that are well suited for computation (such as dot products or cosine similarity) on embedding vectors that are common to ML workflows. We use BFV parameters that achieve post-quantum 128-bit security, meaning they provide strong security against both classical and potential future quantum attacks (previously explained in this post).

HE excels in settings where a client needs to look up information on a server while keeping the lookup computation encrypted. We first show how HE alone enables privacy-preserving server lookups for exact matches with private information retrieval (PIR), and then describe how it can serve more complex ML applications that need approximate matches, using private nearest neighbor search (PNNS).

Private Information Retrieval (PIR)

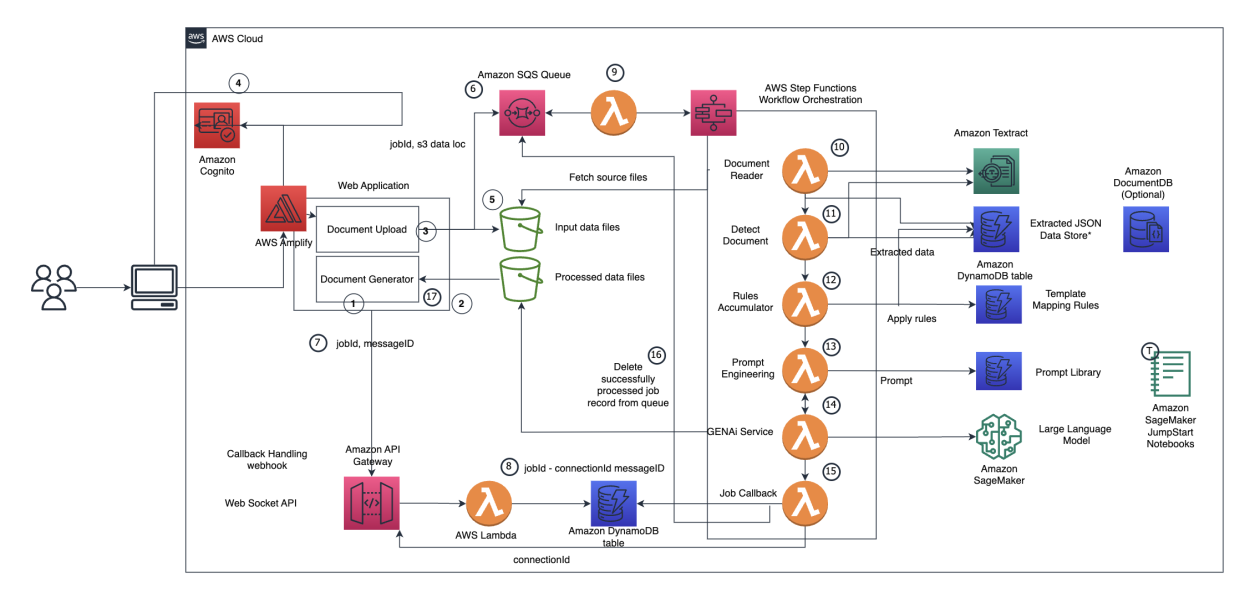

A number of use cases require a device to privately retrieve an exact match to a query from a server database, such as retrieving the appropriate business logo and information to display with a received email (a feature coming to the Mail app in iOS 18 later this year), providing caller ID information on an incoming phone call, or checking if a URL has been classified as adult content (as is done when a parent has set content restrictions for their child’s iPhone or iPad; see Figure 3). To protect privacy, the relevant information should be retrieved without revealing the query itself: in these cases, the business that emailed the user, the phone number that called the user, or the URL that is being checked.

Figure 2A: Features using Private Information Retrieval – Caller ID.

Figure 2B: Features using Private Information Retrieval – Mail.

Figure 2C: Features using Private Information Retrieval – Website filtering.

For these workflows, we use private information retrieval (PIR), a form of private keyword-value database lookup. With this process, a client has a private keyword and seeks to retrieve the associated value from a server, without downloading the entire database. To keep the keyword private, the client encrypts it before sending it to the server. The server performs HE computation between the incoming ciphertext and its database, and sends the resulting encrypted value back to the requesting device, which decrypts it to learn the value associated with the keyword. Throughout this process, the server does not learn the client’s private keyword or the retrieved result, as it operates only on the client’s ciphertext. For example, in the case of web content filtering, the URL is encrypted and sent to the server. The server performs encrypted computation on the ciphertext with the URLs in its database, the output of which is also a ciphertext. This encrypted result is sent back to the device, where it is decrypted to determine whether the website should be blocked under the parental restriction controls.
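To make the structure of such a lookup concrete, here is a minimal plaintext sketch of the selection arithmetic a PIR server can evaluate. It is not the production protocol: in a real deployment the selection vector would be encrypted and the sums and products below would be evaluated homomorphically, so the server could not see which bucket is selected. The bucket count, the hashing of keywords to buckets, the JSON encoding, and all function names are assumptions made for illustration.

```python
import hashlib
import json

NUM_BUCKETS = 8  # assumption: tiny table for readability


def bucket_of(keyword: str) -> int:
    """Map a keyword to a bucket index; client and server share this function."""
    digest = hashlib.sha256(keyword.encode()).digest()
    return int.from_bytes(digest[:8], "big") % NUM_BUCKETS


def build_encoded_buckets(db: dict) -> list:
    """Server setup: group entries by bucket and encode each bucket as an integer."""
    buckets = [[] for _ in range(NUM_BUCKETS)]
    for keyword, value in db.items():
        buckets[bucket_of(keyword)].append([keyword, value])
    return [int.from_bytes(json.dumps(b).encode(), "big") for b in buckets]


def client_query(keyword: str) -> list:
    """One-hot selection vector. In real PIR each entry would be a ciphertext."""
    target = bucket_of(keyword)
    return [1 if i == target else 0 for i in range(NUM_BUCKETS)]


def server_answer(query: list, encoded_buckets: list) -> int:
    """Inner product of the query with the database: only sums and products."""
    return sum(q * e for q, e in zip(query, encoded_buckets))


def client_decode(keyword: str, answer: int):
    """Decode the selected bucket and pick out the requested keyword, if present."""
    raw = answer.to_bytes((answer.bit_length() + 7) // 8, "big")
    return dict(json.loads(raw.decode())).get(keyword)


if __name__ == "__main__":
    db = {"example.com": "allowed", "adult.example": "blocked"}
    encoded = build_encoded_buckets(db)
    answer = server_answer(client_query("adult.example"), encoded)
    print(client_decode("adult.example", answer))
```

Because the server touches every bucket and only adds and multiplies, the same computation can be carried out on ciphertexts, which is what keeps the selected bucket hidden.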

Private Nearest Neighbor Search (PNNS)

For use cases that require an approximate match, we use Apple’s private nearest neighbor search (PNNS), an efficient private database retrieval process for approximate matching on vector embeddings, described in the paper Scalable Private Search with Wally. With PNNS, the client encrypts a vector embedding and sends the resulting ciphertext as a query to the server. The server performs HE computation to conduct a nearest neighbor search and sends the resulting encrypted values back to the requesting device, which decrypts them to learn the nearest neighbor to its query embedding. As with PIR, the server does not learn the client’s private embedding or the retrieved results at any point in this process, because it operates only on the client’s ciphertext.
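The underlying computation is a similarity search over embeddings. The plaintext sketch below shows that computation with cosine similarity, one of the measures mentioned earlier; it omits all encryption, and the function names and the tiny two-dimensional database are assumptions for illustration. In an encrypted setting the query would arrive as a ciphertext and the scores would leave as ciphertexts, with the final selection done by the client after decryption.

```python
import math


def normalize(vector):
    """Scale a vector to unit length so a dot product equals cosine similarity."""
    norm = math.sqrt(sum(x * x for x in vector))
    return [x / norm for x in vector]


def similarity_scores(query, database):
    """Server-side work: one dot product per database embedding."""
    q = normalize(query)
    return [sum(a * b for a, b in zip(q, normalize(row))) for row in database]


def nearest(scores, k=1):
    """Client-side work after decryption: indices of the k highest scores."""
    ranked = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
    return ranked[:k]


if __name__ == "__main__":
    database = [[1.0, 0.0], [0.0, 1.0], [0.7, 0.7]]
    scores = similarity_scores([0.9, 0.8], database)
    print(nearest(scores, k=2))
```

Dot products consist only of multiplications and additions, which is why this kind of scoring maps well onto homomorphic operations.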

By using techniques like PIR and PNNS in combination with HE and other technologies, we are able to build on-device experiences that leverage information from large server-side databases, while protecting user privacy.

Implementing These Techniques in Production

Enhanced Visual Search for Photos, which allows a user to search their photo library for specific locations, like landmarks and points of interest, is an illustrative example of a useful feature powered by combining ML with HE and private server lookups. Using PNNS, a user’s device privately queries a global index of popular landmarks and points of interest maintained by Apple to find approximate matches for places depicted in their photo library. Users can configure this feature on their device, using: Settings → Photos → Enhanced Visual Search.

The process starts with an on-device ML model that analyzes a given photo to determine if there is a “region of interest” (ROI) that may contain a landmark. If the model detects an ROI in the “landmark” domain, a vector embedding is calculated for that region of the image. The dimension and precision of the embedding affect the size of the encrypted request sent to the server, the HE computation demands, and the response size. To meet the latency and cost requirements of large-scale production services, the embedding is therefore quantized to 8-bit precision before being encrypted.
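As a rough illustration of what quantizing an embedding to 8-bit precision involves, the sketch below applies symmetric linear quantization to the signed range [-127, 127]. The article does not specify the quantization scheme used in production, so the scheme, the function names, and the example values here are assumptions.

```python
def quantize_int8(embedding):
    """Map floats to integers in [-127, 127] using one shared scale factor."""
    largest = max(abs(x) for x in embedding)
    scale = largest / 127 if largest > 0 else 1.0
    return [round(x / scale) for x in embedding], scale


def dequantize(quantized, scale):
    """Approximate reconstruction of the original floats."""
    return [q * scale for q in quantized]


if __name__ == "__main__":
    embedding = [0.12, -0.5, 0.33, 0.0]
    quantized, scale = quantize_int8(embedding)
    print(quantized)
    print(dequantize(quantized, scale))
```

Each component now fits in a single byte, and the reconstruction error per component is at most half of `scale`, which is the accuracy traded for a smaller request and cheaper computation.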

The server database to which the client will send its request is divided into disjoint subdivisions, or shards, of embedding clusters. This helps reduce the computational overhead and increase the efficiency of the query, because the server can focus the HE computation on just the relevant portion of the database. A precomputed cluster codebook containing the centroids for the cluster shards is available on the user’s device. This enables the client to locally run a similarity search to identify the closest shard for the embedding. The identifier of that shard is added to the encrypted query and sent to the server.
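The local shard selection amounts to a nearest-centroid search over the codebook. A minimal sketch follows, assuming squared Euclidean distance as the similarity measure and a hypothetical three-entry codebook; the article does not state which measure or codebook size is used on device.

```python
def squared_distance(a, b):
    """Squared Euclidean distance between two equal-length vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def closest_shard(embedding, codebook):
    """Return the index of the centroid nearest to the embedding."""
    return min(range(len(codebook)),
               key=lambda i: squared_distance(embedding, codebook[i]))


if __name__ == "__main__":
    # Hypothetical codebook: one centroid per shard.
    codebook = [[0.0, 0.0], [10.0, 10.0], [-5.0, 5.0]]
    print(closest_shard([9.0, 8.0], codebook))
```

Because this search runs entirely on the device against a codebook that is already stored there, choosing the shard requires no network request.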

Identifying the database shard relevant to the query could reveal sensitive information about the query itself, so we use differential privacy (DP) with iCloud Private Relay as an anonymization network. With DP, the client issues fake queries alongside its real ones, so the server cannot tell which are genuine. The queries are also routed through the anonymization network to ensure the server can’t link multiple requests to the same client. For running PNNS for Enhanced Visual Search, our system ensures strong privacy parameters for each user’s photo library: (ε, δ)-DP with ε = 0.8 and δ = 10⁻⁶. For more details, see Scalable Private Search with Wally.
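For readers unfamiliar with the notation, the standard definition of (ε, δ)-differential privacy is reproduced below with these parameter values substituted. Here M denotes the randomized mechanism, D and D′ are any two neighboring inputs, and S is any set of possible outputs; how "neighboring" is defined for this system is specified in the Wally paper and is not restated here.

```latex
\Pr[\mathcal{M}(D) \in S] \;\le\; e^{\varepsilon} \, \Pr[\mathcal{M}(D') \in S] + \delta,
\qquad \varepsilon = 0.8, \quad \delta = 10^{-6}, \quad e^{0.8} \approx 2.23
```

Informally, changing one input can shift the probability of any observed outcome by a factor of at most about 2.23, apart from an additive slack of one in a million.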

The fleet of servers that handles these queries leverages Apple’s existing ML infrastructure, including a vector database of global landmark image embeddings, expressed as an inverted index. The server identifies the relevant shard based on the index in the client query and uses HE to compute the embedding similarity in this encrypted space. The encrypted scores and the set of corresponding metadata (such as landmark names) for candidate landmarks are then returned to the client.

To optimize the efficiency of server-client communications, all similarity scores are merged into one ciphertext of a specified response size.

The client decrypts the reply to its PNNS query, which may contain multiple candidate landmarks. A specialized, lightweight on-device reranking model then predicts the best candidate by using high-level multimodal feature descriptors, including visual similarity scores; locally stored geo-signals; popularity; and index coverage of landmarks (to debias candidate overweighting). When the model has identified the match, the photo’s local metadata is updated with the landmark label, and the user can easily find the photo when searching their device for the landmark’s name.

Conclusion

As shown in this article, Apple is using HE to uphold our commitment to protecting user privacy, while building on-device experiences enriched with information privately looked up from server databases. By implementing HE with a combination of privacy-preserving technologies like PIR and PNNS, on-device and server-side ML models, and other privacy-preserving techniques, we are able to deliver features like Enhanced Visual Search without revealing to the server any information about a user’s on-device content and activity. Introducing HE to the Apple ecosystem has been central to enabling this, and can also help to provide valuable global knowledge to inform on-device ML models while preserving user privacy. With the recently open-sourced library swift-homomorphic-encryption, developers can now similarly build on-device experiences that leverage server-side data while protecting user privacy.