The emerging field of Styled Handwritten Text Generation (HTG) seeks to create handwritten text images that replicate the unique calligraphic style of individual writers. This research area has diverse practical applications, from generating high-quality training data for personalized Handwritten Text Recognition (HTR) models to automatically generating handwritten notes for individuals with physical impairments. Additionally, the distinct style representations acquired from models designed for this purpose can find utility in other tasks like writer identification, signature verification, and manipulation of handwriting styles.

In styled handwriting generation, relying on style transfer alone proves limiting. Emulating the calligraphy of a particular writer extends beyond texture considerations, such as the color and texture of the background and ink. It also encompasses intricate details like stroke thickness, slant, skew, roundness, individual character shapes, and ligatures. Precise handling of these visual elements is crucial to prevent artifacts that could inadvertently alter the content, such as small extra or missing strokes.

In response to this, specialized methodologies have been devised for HTG. One approach involves treating handwriting as a trajectory composed of individual strokes. Alternatively, it can be approached as an image that captures its visual characteristics.

The former set of techniques employs online HTG strategies, where the prediction of pen trajectory is carried out point by point. On the other hand, the latter set constitutes offline HTG models that directly generate complete textual images. The work presented in this article focuses on the offline HTG paradigm due to its advantageous attributes. Unlike the online approach, it does not necessitate expensive pen-recording training data. As a result, it can be applied even in scenarios where information about an author’s online handwriting is unavailable, such as historical data. Moreover, the offline paradigm is easier to train, as it avoids issues like vanishing gradients and allows for parallelization.
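To make the distinction concrete, the following minimal sketch contrasts the two data representations. The pen samples, the `(dx, dy, pen_down)` tuple layout, and the helper names are illustrative assumptions, not taken from the paper or from any real dataset.

```python
# Online representation: one pen sample per time step as (dx, dy, pen_down).
# Toy values chosen for illustration only.
online_sample = [(0, 0, 1), (1, 2, 1), (1, -2, 1), (2, 0, 0), (0, 2, 1)]

def to_absolute(offsets):
    """Integrate relative pen offsets into absolute (x, y, pen_down) points."""
    x = y = 0
    points = []
    for dx, dy, pen_down in offsets:
        x, y = x + dx, y + dy
        points.append((x, y, pen_down))
    return points

def rasterize(points, width, height):
    """Discard temporal order and mark inked pixels: an offline-style image."""
    image = [[0] * width for _ in range(height)]
    for x, y, pen_down in points:
        if pen_down and 0 <= x < width and 0 <= y < height:
            image[y][x] = 1
    return image

points = to_absolute(online_sample)           # ordered trajectory (online view)
image = rasterize(points, width=5, height=5)  # plain pixel grid (offline view)
```

The online view keeps the order in which the pen moved, which is why it requires pen-recording hardware. The offline view only needs a scan or photograph of the finished text.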

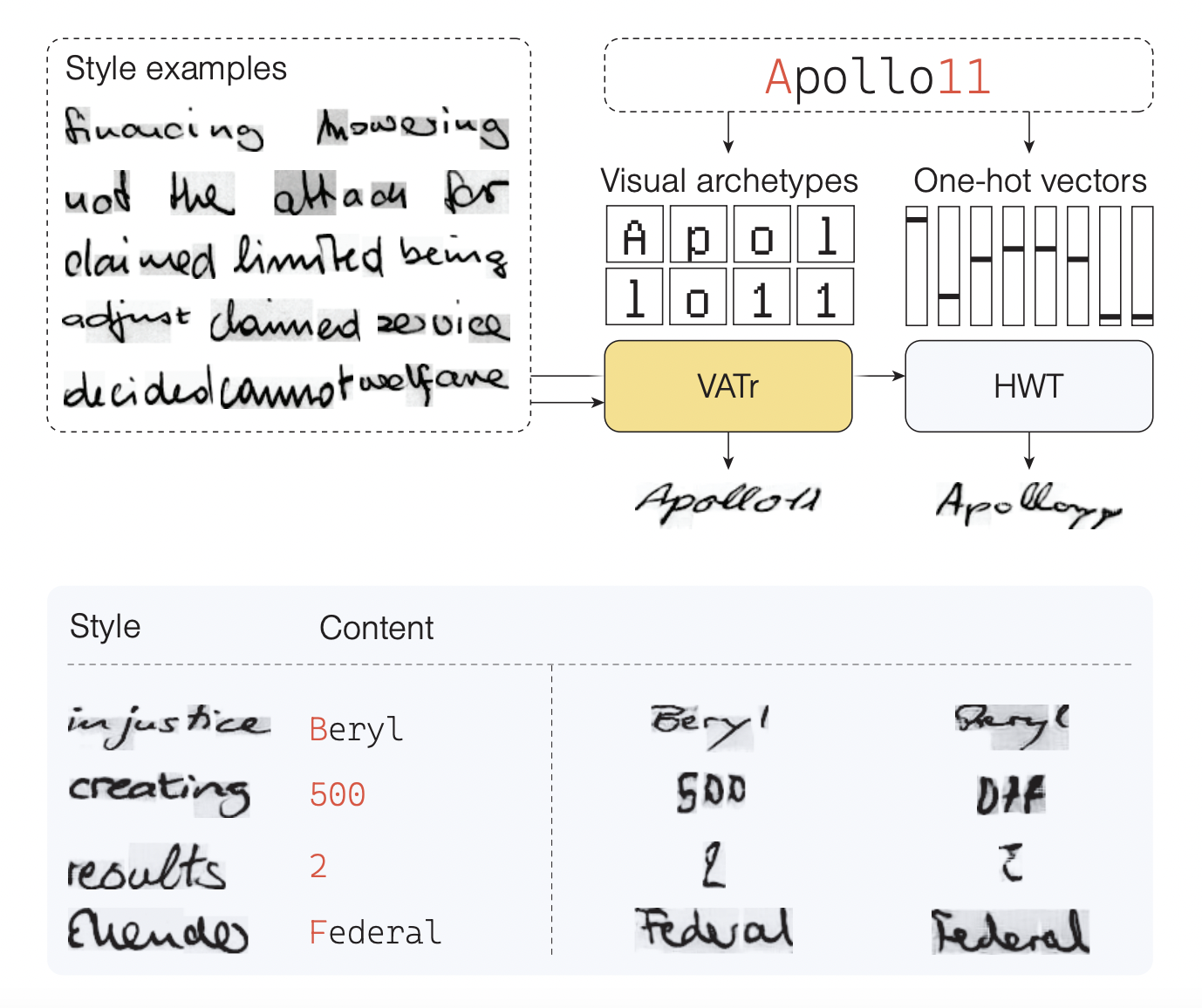

The architecture employed in this study, VATr (Visual Archetypes-based Transformer), introduces a novel approach to few-shot styled offline Handwritten Text Generation (HTG). An overview of the proposed technique is presented in the figure above.

This approach stands out by representing characters as continuous variables and using them as content queries within a Transformer decoder. The decoder is the component responsible for generating stylized text images from the provided content.

A notable advantage of this methodology is its ability to facilitate the generation of characters that are less frequently encountered in the training data, such as numbers, capital letters, and punctuation marks. This is achieved by capitalizing on the proximity in the latent space between rare symbols and more commonly occurring ones.
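The intuition can be illustrated with a toy comparison. In the sketch below, the 5×5 bitmaps are hand-drawn stand-ins rather than real font glyphs, and the one-hot encoding is included only as an assumed baseline for contrast. With one-hot vectors every pair of characters is equally far apart, whereas with glyph-based vectors a digit such as "0" lands close to the visually similar letter "O".

```python
# Toy 5x5 bitmaps (hypothetical, hand-drawn for illustration; not GNU Unifont).
GLYPHS = {
    "O": ["01110",
          "10001",
          "10001",
          "10001",
          "01110"],
    "0": ["01110",
          "10011",
          "10101",
          "11001",
          "01110"],
    "l": ["00100",
          "00100",
          "00100",
          "00100",
          "00100"],
}

def glyph_vector(char):
    """Flatten a character's bitmap into a list of 0/1 values."""
    return [int(bit) for row in GLYPHS[char] for bit in row]

def one_hot_vector(char):
    """Baseline encoding: a single 1 at the character's index."""
    alphabet = sorted(GLYPHS)
    return [1 if c == char else 0 for c in alphabet]

def hamming(a, b):
    """Count the positions where two equal-length vectors differ."""
    return sum(x != y for x, y in zip(a, b))

glyph_O_0 = hamming(glyph_vector("O"), glyph_vector("0"))      # 3
glyph_O_l = hamming(glyph_vector("O"), glyph_vector("l"))      # 13
onehot_O_0 = hamming(one_hot_vector("O"), one_hot_vector("0"))  # 2
onehot_O_l = hamming(one_hot_vector("O"), one_hot_vector("l"))  # 2
```

Because the glyph-based distance reflects visual similarity, a model that has seen many examples of "O" has a useful starting point for the rarer "0". The one-hot distances carry no such signal.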

The architecture employs the GNU Unifont font to render characters as 16×16 binary images, effectively capturing the visual essence of each character. A dense encoding of these character images is then learned and incorporated into the Transformer decoder as queries. These queries guide the decoder’s attention to the style vectors, which are extracted by a pre-trained Transformer encoder.
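The query mechanism described above can be sketched in a few lines of NumPy. This is a simplified illustration under stated assumptions, not the authors' implementation: the glyphs and style vectors are random stand-ins, the embedding width `D_MODEL` and the style vector count `NUM_STYLE` are arbitrary, the projection is untrained, and the attention is single-head without the separate key and value projections a full Transformer decoder would use.

```python
import numpy as np

rng = np.random.default_rng(0)

GLYPH_SIZE = 16   # 16x16 binary glyphs, as described above
D_MODEL = 32      # assumed embedding width (illustrative)
NUM_STYLE = 10    # assumed number of style vectors (illustrative)

def embed_glyphs(glyphs, weight, bias):
    """Flatten each 16x16 glyph and project it to a dense query vector."""
    flat = glyphs.reshape(glyphs.shape[0], -1).astype(np.float64)
    return flat @ weight + bias

def cross_attention(queries, style_vectors):
    """Single-head scaled dot-product attention of content queries over style vectors."""
    scores = queries @ style_vectors.T / np.sqrt(queries.shape[-1])
    scores = scores - scores.max(axis=-1, keepdims=True)  # numerical stability
    weights = np.exp(scores)
    weights = weights / weights.sum(axis=-1, keepdims=True)
    return weights @ style_vectors, weights

# Stand-ins: random bitmaps for a 5-character word and random style vectors.
glyphs = rng.integers(0, 2, size=(5, GLYPH_SIZE, GLYPH_SIZE))
weight = rng.normal(scale=0.05, size=(GLYPH_SIZE * GLYPH_SIZE, D_MODEL))
bias = np.zeros(D_MODEL)
style_vectors = rng.normal(size=(NUM_STYLE, D_MODEL))

queries = embed_glyphs(glyphs, weight, bias)                     # shape (5, 32)
attended, attn_weights = cross_attention(queries, style_vectors)  # (5, 32), (5, 10)
```

Each row of `attn_weights` shows how strongly one character's query draws on each style vector. In a trained model, the projection weights would be learned so that visually similar glyphs produce similar queries.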

Furthermore, the approach benefits from a backbone pre-trained on an extensive synthetic dataset tailored to emphasize calligraphic style attributes. Although such pre-training is often overlooked in the context of HTG, it proves effective at yielding robust style representations, particularly for styles that have not been seen before.

The VATr architecture is validated through extensive experimental comparisons against recent state-of-the-art generative methods. Some outcomes and comparisons with state-of-the-art approaches are reported here below.

This was the summary of VATr, a novel AI framework for handwritten text generation from visual archetypes. If you are interested and want to learn more about it, please feel free to refer to the links cited below.

Check out the Paper and GitHub. All credit for this research goes to the researchers on this project.

Daniele Lorenzi received his M.Sc. in ICT for Internet and Multimedia Engineering in 2021 from the University of Padua, Italy. He is a Ph.D. candidate at the Institute of Information Technology (ITEC) at the Alpen-Adria-Universität (AAU) Klagenfurt. He is currently working in the Christian Doppler Laboratory ATHENA and his research interests include adaptive video streaming, immersive media, machine learning, and QoS/QoE evaluation.