This post is co-written with Jayadeep Pabbisetty, Sr. Specialist Data Engineering at Merck, and Prabakaran Mathaiyan, Sr. ML Engineer at Tiger Analytics.

The large machine learning (ML) model development lifecycle requires a scalable model release process similar to that of software development. Model developers often work together in developing ML models and require a robust MLOps platform to work in. A scalable MLOps platform needs to include a process for handling the workflow of ML model registry, approval, and promotion to the next environment level (development, test, UAT, or production).

A model developer typically starts to work in an individual ML development environment within Amazon SageMaker. When a model is trained and ready to be used, it needs to be approved after being registered in the Amazon SageMaker Model Registry. In this post, we discuss how the AWS AI/ML team collaborated with the Merck Human Health IT MLOps team to build a solution that uses an automated workflow for ML model approval and promotion with human intervention in the middle.

Overview of solution

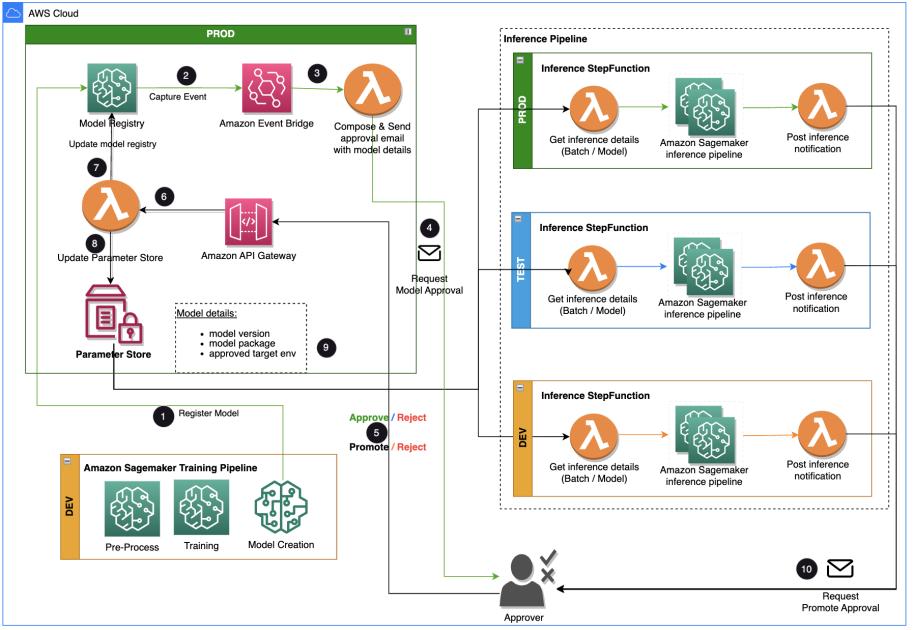

This post focuses on a workflow solution that the ML model development lifecycle can use between the training pipeline and the inference pipeline. The solution provides a scalable workflow for MLOps that supports the ML model approval and promotion process with human intervention. An ML model registered by a data scientist must be reviewed and approved by an approver before it is used in an inference pipeline and in the next environment level (test, UAT, or production). The solution uses AWS Lambda, Amazon API Gateway, Amazon EventBridge, and SageMaker to automate the workflow with human approval intervention in the middle. The architecture diagram above shows the overall system design, the AWS services used, and the workflow for approving and promoting ML models with human intervention from development to production.

The workflow includes the following steps:

- The training pipeline develops and registers a model in the SageMaker model registry. At this point, the model status is PendingManualApproval.

- EventBridge monitors status change events to automatically take actions with simple rules.

- The EventBridge model registration event rule invokes a Lambda function that constructs an email with a link to approve or reject the registered model.

- The approver gets an email with the link to review and approve or reject the model.

- The approver approves the model by following the link in the email to an API Gateway endpoint.

- API Gateway invokes a Lambda function to initiate model updates.

- The model registry is updated for the model status (Approved for the dev environment, but PendingManualApproval for test, UAT, and production).

- The model detail is stored in Parameter Store, a capability of AWS Systems Manager, including the model version, approved target environment, and model package.

- The inference pipeline fetches the model approved for the target environment from Parameter Store.

- The post-inference notification Lambda function collects batch inference metrics and sends an email to the approver to promote the model to the next environment.
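The first steps above (the EventBridge rule and the email links) can be sketched in Python as follows. This is a minimal illustration, not the implementation used in the solution: the function names, the `/decision` path, the query parameter names, and the example URL are all hypothetical, and the event pattern assumes the standard `SageMaker Model Package State Change` event with `ModelPackageGroupName`, `ModelApprovalStatus`, and `ModelPackageArn` in its detail.

```python
import json
from urllib.parse import urlencode


def build_registration_event_pattern(model_package_group: str) -> dict:
    """Event pattern matching model packages that await manual approval.

    The resulting dict would be serialized with json.dumps and supplied as the
    EventPattern of an EventBridge rule (rule creation is not shown here).
    """
    return {
        "source": ["aws.sagemaker"],
        "detail-type": ["SageMaker Model Package State Change"],
        "detail": {
            "ModelPackageGroupName": [model_package_group],
            "ModelApprovalStatus": ["PendingManualApproval"],
        },
    }


def build_decision_links(event: dict, api_base_url: str,
                         environment: str = "dev") -> dict:
    """Build approve/reject links for the email from the EventBridge event.

    The '/decision' path and the query parameter names are assumptions made
    for this sketch; they are not taken from the original solution.
    """
    detail = event["detail"]
    links = {}
    for action in ("approve", "reject"):
        query = urlencode({
            "action": action,
            "environment": environment,
            "model_package_arn": detail["ModelPackageArn"],
        })
        links[action] = f"{api_base_url}/decision?{query}"
    return links


if __name__ == "__main__":
    sample_event = {
        "detail": {
            "ModelPackageArn": "arn:aws:sagemaker:us-east-1:111122223333:"
                               "model-package/demo-group/1",
        }
    }
    print(json.dumps(build_registration_event_pattern("demo-group"), indent=2))
    print(build_decision_links(sample_event, "https://example.invalid/prod"))
```

Keeping the link construction separate from the email delivery makes it easy to unit test the URLs without sending mail.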

Prerequisites

The workflow in this post assumes the environment for the training pipeline is set up in SageMaker, along with other resources. The input to the training pipeline is the features dataset. This post does not cover feature generation; it focuses on the registry, approval, and promotion of ML models after they are trained. The model is registered in the model registry and is governed by a monitoring framework in Amazon SageMaker Model Monitor, which detects drift and triggers retraining when drift occurs.

Workflow details

The approval workflow starts with a model developed from a training pipeline. When data scientists develop a model, they register it to the SageMaker Model Registry with the model status of PendingManualApproval. EventBridge monitors SageMaker for the model registration event and triggers an event rule that invokes a Lambda function. The Lambda function dynamically constructs an approval email containing a link to an API Gateway endpoint that fronts a second Lambda function. When the approver follows the link to approve the model, API Gateway forwards the approval action to that Lambda function, which updates the SageMaker Model Registry and the model attributes in Parameter Store. The approver must be authenticated and part of the approver group managed by Active Directory. The initial approval marks the model as Approved for dev but PendingManualApproval for test, UAT, and production. The model attributes saved in Parameter Store include the model version, model package, and approved target environment.
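A minimal sketch of the approval Lambda logic described above might look like the following. It assumes the query parameter names `action` and `model_package_arn`, a parameter naming scheme of `/mlops/approved-models/<group>/<environment>`, and a JSON payload layout, none of which come from the original solution. It also assumes the overall registry status is set to `Approved` while the per-environment state lives in the custom metadata fields. The clients are passed in (for example, `boto3.client("sagemaker")` and `boto3.client("ssm")`) so the logic can be exercised without AWS access; approver authentication is out of scope here.

```python
import json

ENVIRONMENTS = ("dev", "test", "uat", "prod")


def handle_initial_approval(event, sagemaker_client, ssm_client,
                            parameter_prefix="/mlops/approved-models"):
    """Handle the approve/reject link for the initial (dev) approval."""
    params = event.get("queryStringParameters") or {}
    arn = params.get("model_package_arn")
    action = params.get("action")
    if not arn or action not in ("approve", "reject"):
        return {"statusCode": 400, "body": json.dumps({"error": "bad request"})}

    if action == "reject":
        sagemaker_client.update_model_package(
            ModelPackageArn=arn, ModelApprovalStatus="Rejected")
        return {"statusCode": 200, "body": json.dumps({"status": "Rejected"})}

    # Versioned model package ARNs end in model-package/<group>/<version>.
    group, version = arn.split(":model-package/", 1)[1].split("/", 1)

    # Approved for dev, still pending for the higher environments.
    metadata = {env: "Approved" if env == "dev" else "PendingManualApproval"
                for env in ENVIRONMENTS}
    sagemaker_client.update_model_package(
        ModelPackageArn=arn,
        ModelApprovalStatus="Approved",  # assumption: overall status
        CustomerMetadataProperties=metadata,
    )

    # Store the attributes the inference pipeline will look up later.
    ssm_client.put_parameter(
        Name=f"{parameter_prefix}/{group}/dev",  # hypothetical naming scheme
        Value=json.dumps({
            "model_version": version,
            "model_package_arn": arn,
            "approved_environment": "dev",
        }),
        Type="String",
        Overwrite=True,
    )
    return {"statusCode": 200,
            "body": json.dumps({"status": "Approved", "environment": "dev"})}
```

In a deployed Lambda function, a thin `lambda_handler(event, context)` wrapper would create the two clients once at module load and delegate to this function.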

When an inference pipeline needs to fetch a model, it checks Parameter Store for the latest model version approved for the target environment and gets the inference details. When the inference pipeline is complete, a post-inference notification email is sent to a stakeholder requesting an approval to promote the model to the next environment level. The email has the details about the model and metrics as well as an approval link to an API Gateway endpoint for a Lambda function that updates the model attributes.
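The lookup performed by the inference pipeline could be sketched as below. The parameter naming scheme (`/mlops/approved-models/<group>/<environment>`) and the JSON fields are assumptions made for illustration; the original solution's layout is not described in this post. The `ssm_client` argument is expected to behave like `boto3.client("ssm")`.

```python
import json


def fetch_approved_model(ssm_client, model_package_group, environment,
                         parameter_prefix="/mlops/approved-models"):
    """Return the model details approved for the given environment."""
    name = f"{parameter_prefix}/{model_package_group}/{environment}"
    response = ssm_client.get_parameter(Name=name)
    details = json.loads(response["Parameter"]["Value"])

    # Guard against a parameter that was written for a different environment.
    if details.get("approved_environment") != environment:
        raise ValueError(
            f"{name} is not approved for environment '{environment}'")
    return details
```

The returned dictionary would then supply the model package reference to the batch inference step.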

The following is the sequence of events and implementation steps for the ML model approval/promotion workflow from model creation to production. The model is promoted from development to test, UAT, and production environments with an explicit human approval in each step.

We start with the training pipeline, which is ready for model development. The model version starts as 0 in SageMaker Model Registry.

- The SageMaker training pipeline develops and registers a model in SageMaker Model Registry. Model version 1 is registered and starts with PendingManualApproval status.

The Model Registry metadata has four custom fields for the environments: dev, test, uat, and prod.

- EventBridge monitors the SageMaker Model Registry for the status change to automatically take action with simple rules.

- The model registration event rule invokes a Lambda function that constructs an email with the link to approve or reject the registered model.

- The approver gets an email with the link to review and approve (or reject) the model.

- The approver approves the model by following the link to the API Gateway endpoint in the email.

- API Gateway invokes the Lambda function to initiate model updates.

- The SageMaker Model Registry is updated with the model status.

- The model detail information is stored in Parameter Store, including the model version, approved target environment, and model package.

- The inference pipeline fetches the model approved for the target environment from Parameter Store.

- The post-inference notification Lambda function collects batch inference metrics and sends an email to the approver to promote the model to the next environment.

- The approver approves the model promotion to the next level by following the link to the API Gateway endpoint, which triggers the Lambda function to update the SageMaker Model Registry and Parameter Store.

The complete history of the model versioning and approval is saved for review in Parameter Store.
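The promotion sequence above, with an explicit approval at each level, can be sketched as a small state transition over the four custom metadata fields. This is an illustrative sketch under the assumption that each field holds either `Approved` or `PendingManualApproval` and that a model may be promoted only after every earlier environment is approved; the function names are hypothetical.

```python
PROMOTION_ORDER = ("dev", "test", "uat", "prod")
APPROVED = "Approved"
PENDING = "PendingManualApproval"


def next_environment(current):
    """Return the environment after `current`, or None after prod."""
    index = PROMOTION_ORDER.index(current)
    if index + 1 < len(PROMOTION_ORDER):
        return PROMOTION_ORDER[index + 1]
    return None


def promote(metadata, target):
    """Return a copy of the metadata with `target` marked as approved.

    Raises ValueError if `target` is unknown or if an earlier environment
    has not been approved yet.
    """
    index = PROMOTION_ORDER.index(target)
    blocked = [env for env in PROMOTION_ORDER[:index]
               if metadata.get(env) != APPROVED]
    if blocked:
        raise ValueError(f"cannot promote to {target}: {blocked} not approved")
    updated = dict(metadata)
    updated[target] = APPROVED
    return updated


if __name__ == "__main__":
    state = {"dev": APPROVED, "test": PENDING, "uat": PENDING, "prod": PENDING}
    state = promote(state, next_environment("dev"))
    print(state)
```

The updated dictionary is what a promotion Lambda function would write back to the model registry metadata and Parameter Store.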

Conclusion

The large ML model development lifecycle requires a scalable ML model approval process. In this post, we shared an implementation of an ML model registry, approval, and promotion workflow with human intervention using SageMaker Model Registry, EventBridge, API Gateway, and Lambda. If you are considering a scalable ML model development process for your MLOps platform, you can follow the steps in this post to implement a similar workflow.

About the authors

Tom Kim is a Senior Solution Architect at AWS, where he helps his customers achieve their business objectives by developing solutions on AWS. He has extensive experience in enterprise systems architecture and operations across several industries – particularly in Health Care and Life Science. Tom is always learning new technologies that lead to desired business outcomes for customers – e.g. AI/ML, GenAI and Data Analytics. He also enjoys traveling to new places and playing new golf courses whenever he can find time.

Shamika Ariyawansa, serving as a Senior AI/ML Solutions Architect in the Healthcare and Life Sciences division at Amazon Web Services (AWS), specializes in Generative AI, with a focus on Large Language Model (LLM) training, inference optimizations, and MLOps (Machine Learning Operations). He guides customers in embedding advanced Generative AI into their projects, ensuring robust training processes, efficient inference mechanisms, and streamlined MLOps practices for effective and scalable AI solutions. Beyond his professional commitments, Shamika passionately pursues skiing and off-roading adventures.

Jayadeep Pabbisetty is a Senior ML/Data Engineer at Merck, where he designs and develops ETL and MLOps solutions to unlock data science and analytics for the business. He is always enthusiastic about learning new technologies, exploring new avenues, and acquiring the skills necessary to evolve with the ever-changing IT industry. In his spare time, he follows his passion for sports and likes to travel and explore new places.

Prabakaran Mathaiyan is a Senior Machine Learning Engineer at Tiger Analytics LLC, where he helps his customers achieve their business objectives by providing solutions for the model building, training, validation, monitoring, CI/CD, and improvement of machine learning solutions on AWS. Prabakaran is always learning new technologies that lead to desired business outcomes for customers – e.g. AI/ML, GenAI, GPT and LLM. He also enjoys playing cricket whenever he can find time.
