Generative AI (GenAI) has the potential to revolutionize creative advertising. You can now create a wide variety of novel images, such as product shots, by retraining a GenAI model and providing a few inputs, such as textual prompts (sentences describing the scene and objects to be produced by the model). This technique has shown promising results since 2022, with the explosion of a new class of foundation models (FMs) called latent diffusion models, such as Stable Diffusion, Midjourney, and DALL-E 2. However, using these models in production requires constant refinement of the generation process to produce consistent outputs. This often means creating a large number of sample images of the product and clever prompt engineering, which makes the task difficult at scale.

In this post, we explore how this transformative technology can be harnessed to generate captivating and innovative advertisements at scale, especially when dealing with large catalogs of images. By using the power of GenAI, specifically through the technique of inpainting, we can seamlessly create image backgrounds, resulting in visually stunning and engaging content and reducing unwanted image artifacts (termed model hallucinations). We also delve into the practical implementation of this technique by utilizing Amazon SageMaker endpoints, which enable efficient deployment of the GenAI models driving this creative process.

We use inpainting as the key technique within GenAI-based image generation because it offers a powerful solution for replacing missing elements in images. However, this presents certain challenges. For instance, precise control over the positioning of objects within the image can be limited, leading to potential issues such as image artifacts, floating objects, or unblended boundaries, as shown in the following example images.

To overcome this, we propose striking a balance between creative freedom and efficient production by generating a multitude of realistic images with minimal supervision. To scale the proposed solution for production and streamline the deployment of AI models in the AWS environment, we demonstrate it using SageMaker endpoints.

In particular, we propose splitting the inpainting process into a set of layers, each one potentially with a different set of prompts. The process can be summarized as the following steps:

- First, we prompt for a general scene (for example, “park with trees in the back”) and randomly place the object on that background.

- Next, we add a layer in the lower mid-section of the object by prompting where the object lies (for example, “picnic on grass, or wooden table”).

- Finally, we add a layer similar to the background layer on the upper mid-section of the object using the same prompt as the background.

The benefit of this process is improved realism: the object is perceived with better scaling and positioning relative to the background environment, which matches human expectations. The following figure shows the steps of the proposed solution.
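The layering idea can be sketched with plain NumPy masks. This is a minimal illustration under stated assumptions, not the code from the repository: the helper name `layer_masks` is hypothetical, and splitting the canvas at the vertical midpoint of the object is one interpretation of the “upper mid-section” and “lower mid-section” layers described above.

```python
import numpy as np


def layer_masks(object_mask: np.ndarray) -> tuple[np.ndarray, np.ndarray]:
    """Split the editable (non-object) pixels into an upper and a lower layer.

    object_mask: 2D boolean array, True where the product is.
    Returns (upper_layer, lower_layer) as boolean arrays of the same shape.
    Assumption: the layers meet at the vertical midpoint of the object.
    """
    rows = np.flatnonzero(object_mask.any(axis=1))
    if rows.size == 0:
        raise ValueError("object_mask contains no object pixels")
    mid_row = (rows[0] + rows[-1] + 1) // 2

    editable = ~object_mask          # never repaint the product itself
    upper = editable.copy()
    upper[mid_row:, :] = False       # keep only rows above the midpoint
    lower = editable.copy()
    lower[:mid_row, :] = False       # keep only rows from the midpoint down
    return upper, lower


# Example: a 6x6 canvas with a 2x2 object in the middle
canvas = np.zeros((6, 6), dtype=bool)
canvas[2:4, 2:4] = True
upper_layer, lower_layer = layer_masks(canvas)
```

In this sketch, the upper layer would be inpainted with the background prompt and the lower layer with the prompt describing the surface the object rests on.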

Solution overview

The solution uses the following data flow:

- Segment Anything Model (SAM) and Stable Diffusion Inpainting models are hosted in SageMaker endpoints.

- A background prompt is used to create a generated background image using the Stable Diffusion model.

- A base product image is passed through SAM to generate a mask. The inverse of the mask is called the anti-mask.

- The generated background image and the mask, along with foreground prompts and negative prompts, are used as input to the Stable Diffusion Inpainting model to produce an intermediate background image.

- Similarly, the generated background image and the anti-mask, along with foreground prompts and negative prompts, are used as input to the Stable Diffusion Inpainting model to produce an intermediate foreground image.

- The final generated product image is obtained by combining the intermediate foreground image and the intermediate background image.
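The mask, anti-mask, and final combination step can be illustrated with a few lines of NumPy. This is a hedged sketch rather than the repository's implementation: the function names are hypothetical, the mask is assumed to be a `uint8` array with 255 on selected pixels and 0 elsewhere, and the choice of which image fills which region is only for illustration.

```python
import numpy as np


def anti_mask(mask: np.ndarray) -> np.ndarray:
    """Invert a uint8 mask: 255 becomes 0 and 0 becomes 255."""
    return 255 - mask


def combine(image_in_mask: np.ndarray,
            image_elsewhere: np.ndarray,
            mask: np.ndarray) -> np.ndarray:
    """Take pixels from image_in_mask where the mask is white,
    and from image_elsewhere where it is black.

    Both images are H x W x 3 uint8 arrays; mask is H x W uint8.
    """
    weight = (mask.astype(np.float32) / 255.0)[..., None]  # H x W x 1
    blended = weight * image_in_mask + (1.0 - weight) * image_elsewhere
    return blended.round().astype(np.uint8)


# Example with a 1x2 image: left pixel selected by the mask, right pixel not
demo_mask = np.array([[255, 0]], dtype=np.uint8)
first = np.full((1, 2, 3), 10, dtype=np.uint8)
second = np.full((1, 2, 3), 200, dtype=np.uint8)
combined = combine(first, second, demo_mask)
```

Because the weights are floats, the same function also handles soft (feathered) masks, which can help reduce visible seams at the object boundary.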

Prerequisites

We have developed an AWS CloudFormation template that will create the SageMaker notebooks used to deploy the endpoints and run inference.

You will need an AWS account with AWS Identity and Access Management (IAM) roles that provide access to the following:

- AWS CloudFormation

- SageMaker

- Although SageMaker endpoints provide instances to run ML models, we use GPU-enabled SageMaker endpoints to run heavy workloads such as generative AI models. Refer to Amazon SageMaker Pricing for more information about pricing.

- We use the NVIDIA A10G-enabled instance ml.g5.2xlarge to host the models.

- Amazon Simple Storage Service (Amazon S3)

For more details, check out the GitHub repository and the CloudFormation template.

Mask the area of interest of the product

In general, we need to provide an image of the object that we want to place and a mask delineating the contour of the object. This can be done using tools such as Amazon SageMaker Ground Truth. Alternatively, we can automatically segment the object using AI tools such as the Segment Anything Model (SAM), assuming that the object is in the center of the image.
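One way to use the centered-object assumption is to prompt SAM with a single foreground point at the image center. The following sketch only builds the prompt arrays and picks the highest-scoring mask; the helper names are hypothetical, and whether inference_sam.py prompts SAM this way is an assumption. The array layout follows the `point_coords` and `point_labels` arguments of `SamPredictor.predict` in the `segment_anything` package, which expects (x, y) pixel coordinates and a label of 1 for foreground points.

```python
import numpy as np


def center_point_prompt(height: int, width: int) -> tuple[np.ndarray, np.ndarray]:
    """Build a single foreground point prompt at the image center.

    Returns (point_coords, point_labels): coordinates are (x, y) pixels
    with shape (1, 2); the label 1 marks the point as foreground.
    """
    point_coords = np.array([[width // 2, height // 2]], dtype=np.float32)
    point_labels = np.array([1], dtype=np.int64)
    return point_coords, point_labels


def select_best_mask(masks: np.ndarray, scores: np.ndarray) -> np.ndarray:
    """Return the candidate mask with the highest predicted quality score."""
    return masks[int(np.argmax(scores))]


# Example for a 100 (height) x 200 (width) image
coords, labels = center_point_prompt(100, 200)
```

With a loaded `SamPredictor`, these arrays would be passed as `predictor.predict(point_coords=coords, point_labels=labels, multimask_output=True)`, and the returned masks and scores handed to `select_best_mask`.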

Use SAM to generate a mask

SAM is a promptable segmentation foundation model that generates high-quality masks for objects within images. It uses deep learning models trained on extensive datasets to identify and segment objects of interest, providing precise boundaries and pixel-level masks. This automates the time-consuming and labor-intensive task of manually creating masks, so masks for object recognition, image editing, and other computer vision tasks can be generated quickly.

Host the SAM model on a SageMaker endpoint

We use the notebook 1_HostGenAIModels.ipynb to create SageMaker endpoints and host the SAM model.

We use the inference code in inference_sam.py and package that into a code.tar.gz file, which we use to create the SageMaker endpoint. The code downloads the SAM model, hosts it on an endpoint, and provides an entry point to run inference and generate output:

from datetime import datetime

from sagemaker import s3
from sagemaker.deserializers import JSONDeserializer
from sagemaker.pytorch import PyTorchModel

# bucket, role, sess, and INSTANCE_TYPE are assumed to be defined earlier in the notebook

# Timestamped name so that repeated deployments do not collide
SAM_ENDPOINT_NAME = 'sam-pytorch-' + str(datetime.utcnow().strftime('%Y-%m-%d-%H-%M-%S-%f'))
prefix_sam = "SAM/demo-custom-endpoint"

# Upload the packaged inference code to Amazon S3
model_data_sam = s3.S3Uploader.upload("code.tar.gz", f's3://{bucket}/{prefix_sam}')

model_sam = PyTorchModel(entry_point="inference_sam.py",
                         model_data=model_data_sam,
                         framework_version='1.12',
                         py_version='py38',
                         role=role,
                         env={'TS_MAX_RESPONSE_SIZE': '2000000000', 'SAGEMAKER_MODEL_SERVER_TIMEOUT': '300'},
                         sagemaker_session=sess,
                         name="model-" + SAM_ENDPOINT_NAME)

# Deploy the model to a real-time endpoint that returns JSON
predictor_sam = model_sam.deploy(initial_instance_count=1,
                                 instance_type=INSTANCE_TYPE,
                                 deserializer=JSONDeserializer(),
                                 endpoint_name=SAM_ENDPOINT_NAME)

Invoke the SAM model and generate a mask

The following code is part of the 2_GenerateInPaintingImages.ipynb notebook, which is used to run the endpoints and generate results:

import numpy as np
from PIL import Image
from sagemaker.deserializers import JSONDeserializer
from sagemaker.pytorch import PyTorchPredictor

raw_image = Image.open("images/speaker.png").convert("RGB")

# Attach to the SAM endpoint that is already deployed
predictor_sam = PyTorchPredictor(endpoint_name=SAM_ENDPOINT_NAME,
                                 deserializer=JSONDeserializer())

output_array = predictor_sam.predict(raw_image, initial_args={'Accept': 'application/json'})
mask_image = Image.fromarray(np.array(output_array).astype(np.uint8))

# save the mask image using PIL Image
mask_image.save('images/speaker_mask.png')

The following figure shows the resulting mask obtained from the product image.
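To make the request and response path concrete, the following is a minimal sketch of the handler functions that a SageMaker PyTorch entry point script can define (`model_fn`, `input_fn`, `predict_fn`, and `output_fn`). It is not the content of inference_sam.py: the placeholder model simply thresholds pixel brightness so that the sketch runs without SAM weights. The content types are assumptions based on the invocation code above, where the predictor's default NumPy serializer sends `application/x-npy` and the client asks for `application/json`.

```python
import io
import json

import numpy as np


def model_fn(model_dir):
    """Called once when the container starts; returns the model object.

    Placeholder (assumption): a real script would load the SAM weights here.
    """
    def brightness_threshold(image):
        # Stand-in for segmentation: white (255) where mean brightness > 127
        return (image.mean(axis=-1) > 127).astype(np.uint8) * 255

    return brightness_threshold


def input_fn(request_body, request_content_type):
    """Decode the request payload into a NumPy image array."""
    if request_content_type == "application/x-npy":
        return np.load(io.BytesIO(request_body), allow_pickle=False)
    raise ValueError(f"Unsupported content type: {request_content_type}")


def predict_fn(input_data, model):
    """Run the model on the decoded input."""
    return model(input_data)


def output_fn(prediction, accept):
    """Encode the mask as a JSON nested list for the client."""
    if accept == "application/json":
        return json.dumps(prediction.tolist())
    raise ValueError(f"Unsupported accept type: {accept}")
```

Returning a mask as a JSON nested list is verbose, which is one reason a large `TS_MAX_RESPONSE_SIZE` value is useful when the response carries full-resolution images.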

Use inpainting to create a generated image

By combining the power of inpainting with the mask generated by SAM and the user’s prompt, we can create remarkable generated images. Inpainting utilizes advanced generative AI techniques to intelligently fill in the missing or masked regions of an image, seamlessly blending them with the surrounding content. With the SAM-generated mask as guidance and the user’s prompt as a creative input, inpainting algorithms can generate visually coherent and contextually appropriate content, resulting in stunning and personalized images. This fusion of technologies opens up endless creative possibilities, allowing users to transform their visions into vivid, captivating visual narratives.

Host a Stable Diffusion Inpainting model on a SageMaker endpoint

As with the SAM model, we use the notebook 1_HostGenAIModels.ipynb to create SageMaker endpoints and host the Stable Diffusion Inpainting model.

We use the inference code in inference_inpainting.py and package that into a code.tar.gz file, which we use to create the SageMaker endpoint. The code downloads the Stable Diffusion Inpainting model, hosts it on an endpoint, and provides an entry point to run inference and generate output:

from datetime import datetime

from sagemaker import s3
from sagemaker.deserializers import JSONDeserializer
from sagemaker.pytorch import PyTorchModel
from sagemaker.serializers import JSONSerializer

# bucket, role, sess, and INSTANCE_TYPE are assumed to be defined earlier in the notebook

# Timestamped name so that repeated deployments do not collide
INPAINTING_ENDPOINT_NAME = 'inpainting-pytorch-' + str(datetime.utcnow().strftime('%Y-%m-%d-%H-%M-%S-%f'))
prefix_inpainting = "InPainting/demo-custom-endpoint"

# Upload the packaged inference code to Amazon S3
model_data_inpainting = s3.S3Uploader.upload("code.tar.gz", f"s3://{bucket}/{prefix_inpainting}")

model_inpainting = PyTorchModel(entry_point="inference_inpainting.py",
                                model_data=model_data_inpainting,
                                framework_version='1.12',
                                py_version='py38',
                                role=role,
                                env={'TS_MAX_RESPONSE_SIZE': '2000000000', 'SAGEMAKER_MODEL_SERVER_TIMEOUT': '300'},
                                sagemaker_session=sess,
                                name="model-" + INPAINTING_ENDPOINT_NAME)

# Deploy the model to a real-time endpoint that accepts and returns JSON
predictor_inpainting = model_inpainting.deploy(initial_instance_count=1,
                                               instance_type=INSTANCE_TYPE,
                                               serializer=JSONSerializer(),
                                               deserializer=JSONDeserializer(),
                                               endpoint_name=INPAINTING_ENDPOINT_NAME,
                                               volume_size=128)

Invoke the Stable Diffusion Inpainting model and generate a new image

Similarly to the step to invoke the SAM model, the notebook 2_GenerateInPaintingImages.ipynb is used to run the inference on the endpoints and generate results:

raw_image = Image.open("images/speaker.png").convert("RGB")

mask_image = Image.open('images/speaker_mask.png').convert('RGB')

prompt_fr = "table and chair with books"

prompt_bg = "window and couch, table"

negative_prompt = "longbody, lowres, bad anatomy, bad hands, missing fingers, extra digit, fewer digits, cropped, worst quality, low quality, letters"

inputs = {}

inputs["image"] = np.array(raw_image)

inputs["mask"] = np.array(mask_image)

inputs["prompt_fr"] = prompt_fr

inputs["prompt_bg"] = prompt_bg

inputs["negative_prompt"] = negative_prompt

predictor_inpainting = PyTorchPredictor(endpoint_name=INPAINTING_ENDPOINT_NAME,

serializer=JSONSerializer(),

deserializer=JSONDeserializer())

output_array = predictor_inpainting.predict(inputs, initial_args={'Accept': 'application/json'})

gai_image = Image.fromarray(np.array(output_array[0]).astype(np.uint8))

gai_background = Image.fromarray(np.array(output_array[1]).astype(np.uint8))

gai_mask = Image.fromarray(np.array(output_array[2]).astype(np.uint8))

post_image = Image.fromarray(np.array(output_array[3]).astype(np.uint8))

# save the generated image using PIL Image

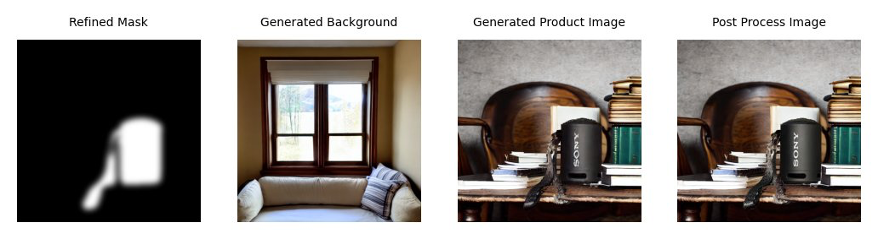

post_image.save('images/speaker_generated.png')The following figure shows the refined mask, generated background, generated product image, and postprocessed image.

The generated product image uses the following prompts:

- Background generation – “chair, couch, window, indoor”

- Inpainting – “besides books”

Clean up

In this post, we use two GPU-enabled SageMaker endpoints, which contribute the majority of the cost. Turn off these endpoints when they are not in use to avoid extra cost. We have provided a notebook, 3_CleanUp.ipynb, which can assist in cleaning up the endpoints. We also use a SageMaker notebook to host the models and run inference, so it’s good practice to stop the notebook instance when it’s not being used.
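For reference, the same cleanup can be done programmatically. The following is a minimal sketch, not the content of 3_CleanUp.ipynb: the function name is hypothetical, and it assumes a boto3 SageMaker client created with `boto3.client("sagemaker")`. It looks up the endpoint configuration and model names before deleting, so that no orphaned resources remain.

```python
def delete_endpoint_resources(sm_client, endpoint_name):
    """Delete an endpoint together with its endpoint config and models.

    sm_client: a boto3 SageMaker client (or any object with the same methods).
    Returns the list of model names that were deleted.
    """
    # Look up the resources behind the endpoint before deleting anything
    endpoint = sm_client.describe_endpoint(EndpointName=endpoint_name)
    config_name = endpoint["EndpointConfigName"]
    config = sm_client.describe_endpoint_config(EndpointConfigName=config_name)
    model_names = [variant["ModelName"] for variant in config["ProductionVariants"]]

    # Deleting the endpoint stops the instance charges; the config and
    # model entries are removed afterwards to keep the account tidy
    sm_client.delete_endpoint(EndpointName=endpoint_name)
    sm_client.delete_endpoint_config(EndpointConfigName=config_name)
    for model_name in model_names:
        sm_client.delete_model(ModelName=model_name)
    return model_names
```

A typical call would pass the client and each endpoint name in turn, for example `delete_endpoint_resources(boto3.client("sagemaker"), SAM_ENDPOINT_NAME)` and then the same for `INPAINTING_ENDPOINT_NAME`.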

Conclusion

Generative AI models are generally large-scale ML models that require specific resources to run efficiently. In this post, we demonstrated, using an advertising use case, how SageMaker endpoints offer a scalable and managed environment for hosting generative AI models such as the text-to-image foundation model Stable Diffusion. We demonstrated how two models can be hosted and run as needed, and multiple models can also be hosted from a single endpoint. This eliminates the complexities associated with infrastructure provisioning, scalability, and monitoring, enabling organizations to focus solely on deploying their models and serving predictions to solve their business challenges. With SageMaker endpoints, organizations can efficiently deploy and manage multiple models within a unified infrastructure, achieving optimal resource utilization and reducing operational overhead.

The detailed code is available on GitHub. The code demonstrates the use of AWS CloudFormation and the AWS Cloud Development Kit (AWS CDK) to automate the process of creating SageMaker notebooks and other required resources.

About the authors

Fabian Benitez-Quiroz is an IoT Edge Data Scientist in AWS Professional Services. He holds a PhD in Computer Vision and Pattern Recognition from The Ohio State University. Fabian is involved in helping customers run their machine learning models with low latency on IoT devices and in the cloud across various industries.

Romil Shah is a Sr. Data Scientist at AWS Professional Services. Romil has more than 6 years of industry experience in computer vision, machine learning, and IoT edge devices. He is involved in helping customers optimize and deploy their machine learning models for edge devices and on the cloud. He works with customers to create strategies for optimizing and deploying foundation models.

Han Man is a Senior Data Science & Machine Learning Manager with AWS Professional Services based in San Diego, CA. He has a PhD in Engineering from Northwestern University and has several years of experience as a management consultant advising clients in manufacturing, financial services, and energy. Today, he is passionately working with key customers from a variety of industry verticals to develop and implement ML and GenAI solutions on AWS.
