Migrating to the cloud is an essential step for modern organizations aiming to capitalize on the flexibility and scale of cloud resources. Tools like Terraform and AWS CloudFormation are pivotal for such transitions, offering infrastructure as code (IaC) capabilities that define and manage complex cloud environments with precision. However, despite these benefits, the IaC learning curve and the complexity of adhering to your organization’s and industry-specific compliance and security standards can slow down your cloud adoption journey. Organizations typically counter these hurdles by investing in extensive training programs or hiring specialized personnel, which often leads to increased costs and delayed migration timelines.

Generative artificial intelligence (AI) with Amazon Bedrock directly addresses these challenges. Amazon Bedrock is a fully managed service that offers a choice of high-performing foundation models (FMs) from leading AI companies like AI21 Labs, Anthropic, Cohere, Meta, Stability AI, and Amazon through a single API, along with a broad set of capabilities to build generative AI applications with security, privacy, and responsible AI. Amazon Bedrock empowers teams to generate Terraform and CloudFormation scripts that are custom-fitted to organizational needs while integrating compliance and security best practices. Traditionally, cloud engineers learning IaC would manually sift through documentation and best practices to write compliant IaC scripts. With Amazon Bedrock, teams can input high-level architectural descriptions and have a foundation model produce a baseline configuration of Terraform scripts. These generated scripts are tailored to your organization’s requirements and guided by industry standards for security and compliance. They serve as a starting point and require further refinement and validation before they meet production-level standards.

This solution not only accelerates the migration process but also provides a standardized and secure cloud infrastructure. Additionally, it offers beginner cloud engineers initial script drafts as standard templates to build upon, facilitating their IaC learning journey.

As you navigate the complexities of cloud migration, the need for a structured, secure, and compliant environment is paramount. AWS Landing Zone addresses this need by offering a standardized approach to deploying AWS resources. This makes sure your cloud foundation is built according to AWS best practices from the start. With AWS Landing Zone, you eliminate the guesswork in security configurations, resource provisioning, and account management. It’s particularly beneficial for organizations looking to scale without compromising on governance or control, providing a clear path to a robust and efficient cloud setup.

In this post, we show you how to generate customized, compliant IaC scripts for AWS Landing Zone using Amazon Bedrock.

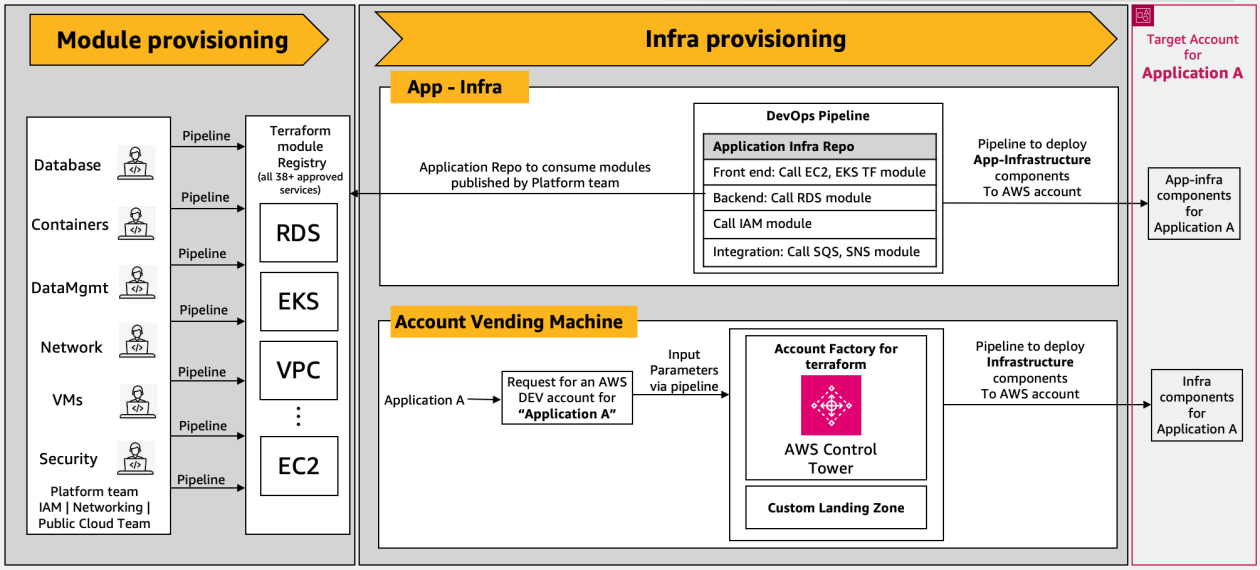

AWS Landing Zone architecture in the context of cloud migration

AWS Landing Zone can help you set up a secure, multi-account AWS environment based on AWS best practices. It provides a baseline environment to get started with a multi-account architecture, automate the setup of new accounts, and centralize compliance, security, and identity management. The following is an example of a customized Terraform-based AWS Landing Zone solution, in which each application resides in its own AWS account.

The high-level workflow includes the following components:

- Module provisioning – Different platform teams across various domains, such as databases, containers, data management, networking, and security, develop and publish certified or custom modules. These are delivered through pipelines to a Terraform private module registry, which is maintained by the organization for consistency and standardization.

- Account vending machine layer – The account vending machine (AVM) layer uses either AWS Control Tower, AWS Account Factory for Terraform (AFT), or a custom landing zone solution to vend accounts. In this post, we refer to these solutions collectively as the AVM layer. When application owners submit a request to the AVM layer, it processes the input parameters from the request to provision a target AWS account. This account is then provisioned with tailored infrastructure components through AVM customizations, which include AWS Control Tower customizations or AFT customizations.

- Application infrastructure layer – In this layer, application teams deploy their infrastructure components into the provisioned AWS accounts. This is achieved by writing Terraform code within an application-specific repository. The Terraform code calls upon the modules previously published to the Terraform private registry by the platform teams.

Overcoming on-premises IaC migration challenges with generative AI

Teams maintaining on-premises applications often encounter a learning curve with Terraform, a key tool for IaC in AWS environments. This skill gap can be a significant hurdle in cloud migration efforts. Amazon Bedrock, with its generative AI capabilities, plays an essential role in mitigating this challenge. It facilitates the automation of Terraform code creation for the application infrastructure layer, empowering teams with limited Terraform experience to make an efficient transition to AWS.

Amazon Bedrock generates Terraform code from architectural descriptions. The generated code is custom and standardized based on organizational best practices, security, and regulatory guidelines. This standardization is made possible by using advanced prompts in conjunction with Knowledge Bases for Amazon Bedrock, which stores information on organization-specific Terraform modules. This solution uses Retrieval Augmented Generation (RAG) to enrich the input prompt to Amazon Bedrock with details from the knowledge base, making sure the output Terraform configuration and README contents are compliant with your organization’s Terraform best practices and guidelines.
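The prompt enrichment described above can be sketched in a few lines of Python. This is a minimal illustration, not the code from the solution's repository: the `ModuleSnippet` type, the `build_enriched_prompt` function, and the tag names in the prompt are all hypothetical, and the retrieval step itself is assumed to have already returned the relevant passages from the knowledge base.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class ModuleSnippet:
    """One passage retrieved from the knowledge base (hypothetical shape)."""
    name: str
    text: str


def build_enriched_prompt(architecture: str, snippets: list[ModuleSnippet]) -> str:
    """Combine instructions, retrieved module guidance, and the architecture description."""
    guidance = "\n\n".join(f"[{s.name}]\n{s.text}" for s in snippets)
    if not guidance:
        # Make the absence of retrieved context explicit in the prompt.
        guidance = "(no organization-specific modules were retrieved)"
    return (
        "Generate Terraform configurations for AWS services.\n"
        "Use only the organization-approved modules listed below.\n\n"
        f"<modules>\n{guidance}\n</modules>\n\n"
        f"<architecture>\n{architecture.strip()}\n</architecture>"
    )
```

Keeping the retrieved guidance and the architecture description in separately labeled sections makes it easier to inspect exactly what context the model received when you review a generated configuration.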

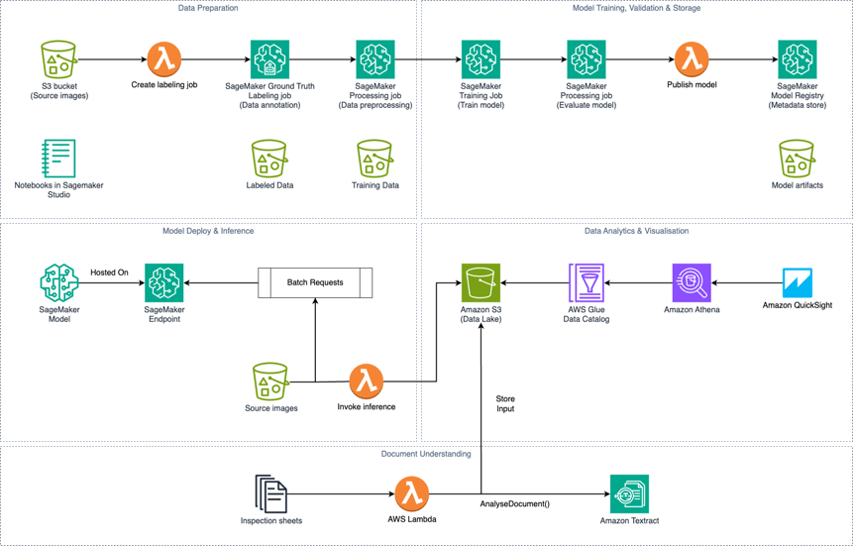

The following diagram illustrates this architecture.

The workflow consists of the following steps:

- The process begins with account vending, where application owners submit a request for a new AWS account. This invokes the AVM, which processes the request parameters to provision the target AWS account.

- An architecture description for an application slated for migration is passed as one of the inputs to the AVM layer.

- After the account is provisioned, AVM customizations are applied. This can include AWS Control Tower customizations or AFT customizations that set up the account with the necessary infrastructure components and configurations in line with organizational policies.

- In parallel, the AVM layer invokes an AWS Lambda function to generate Terraform code. This function enriches the architecture description with a customized prompt, and uses RAG to further enhance the prompt with organization-specific coding guidelines from Knowledge Bases for Amazon Bedrock. The knowledge base includes tailored best practices, security guardrails, and guidelines specific to the organization. See an illustrative example of organization-specific Terraform module specifications and guidelines uploaded to the knowledge base.

- Before deployment, the initial draft of the Terraform code is thoroughly reviewed by cloud engineers or an automated code review system to confirm that it meets all technical and compliance standards.

- The reviewed and updated Terraform scripts are then used to deploy infrastructure components into the newly provisioned AWS account, setting up compute, storage, and networking resources required for the application.
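As one illustration of what an automated review step might check before deployment, the following sketch flags module sources that don't come from the organization's private registry. It is an assumption-laden example: the registry prefix `app.terraform.io/example-org/` and the function name `unapproved_sources` are hypothetical, and the check is a plain text scan rather than a real HCL parse, so it also matches `source` entries in `required_providers` blocks.

```python
from __future__ import annotations

import re

# Hypothetical registry prefix; replace with your organization's private registry.
APPROVED_PREFIX = "app.terraform.io/example-org/"

# Matches lines of the form: source = "..."
SOURCE_ASSIGNMENT = re.compile(r'^\s*source\s*=\s*"([^"]+)"', re.MULTILINE)


def unapproved_sources(terraform_code: str, approved_prefix: str = APPROVED_PREFIX) -> list[str]:
    """Return every source value that does not start with the approved prefix."""
    return [
        source
        for source in SOURCE_ASSIGNMENT.findall(terraform_code)
        if not source.startswith(approved_prefix)
    ]
```

A check like this complements, rather than replaces, the engineer review described in the workflow. An empty result only means that every `source` points at the approved registry, not that the configuration is correct or compliant.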

Solution overview

The AWS Landing Zone deployment uses a Lambda function for generating Terraform scripts from architectural inputs. This function, which is central to the operation, translates these inputs into compliant code, using Amazon Bedrock and Knowledge Bases for Amazon Bedrock. The output is then stored in a GitHub repository, corresponding to the specific application in migration. The following sections detail the prerequisites and specific steps needed to implement this solution.

Prerequisites

You should have the following:

Configure the Lambda function to generate custom code

This Lambda function is a key component in automating the creation of customized, compliant Terraform configurations for AWS services. It commits the generated configurations directly to a designated GitHub repository, aligning with organizational best practices. For the function code, refer to the following GitHub repo. To create the Lambda function, follow the instructions.

The following diagram illustrates the workflow of the function.

The workflow includes the following steps:

- The function is invoked by an event from the AVM layer, containing the architecture description.

- The function retrieves and uses Terraform module definitions from the knowledge base.

- The function invokes the Amazon Bedrock model twice, following recommended prompt engineering guidelines. The function applies RAG to enrich the input prompt with the Terraform module information, making sure the output code meets organizational best practices.

  - First, generate Terraform configurations following organizational coding guidelines and include Terraform module details from the knowledge base. For example, the prompt could be: “Generate Terraform configurations for AWS services. Follow security best practices by using IAM roles and least privilege permissions. Include all necessary parameters, with default values. Add comments explaining the overall architecture and the purpose of each resource.”

  - Second, create a detailed README file. For example: “Generate a detailed README for the Terraform configuration based on AWS services. Include sections on security improvements, cost optimization tips following the AWS Well-Architected Framework. Also, include detailed Cost Breakdown for each AWS service used with hourly rates and total daily and monthly costs.”

- It commits the generated Terraform configuration and the README to the GitHub repository, providing traceability and transparency.

- Lastly, it responds with success, including URLs to the committed GitHub files, or returns detailed error information for troubleshooting.
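The control flow in the preceding steps can be sketched as follows. This is not the function from the GitHub repo. It is a simplified outline in which retrieval, model invocation, and the GitHub commit are passed in as plain callables, so the structure is visible without any AWS or GitHub dependencies. The event key `architecture_description`, the file names, and all function names are hypothetical.

```python
from __future__ import annotations

import json
from typing import Callable


def make_handler(
    retrieve_modules: Callable[[str], str],   # knowledge base lookup (assumed wrapper)
    generate: Callable[[str], str],           # model invocation (assumed wrapper)
    commit_file: Callable[[str, str], str],   # commits path/content, returns a URL
):
    """Build a Lambda-style handler from the three injected dependencies."""

    def handler(event: dict, context: object = None) -> dict:
        try:
            architecture = event["architecture_description"]  # hypothetical key
            modules = retrieve_modules(architecture)

            # First model call: Terraform configuration, enriched with module details.
            terraform = generate(
                "Generate Terraform configurations for AWS services.\n\n"
                f"Modules:\n{modules}\n\nArchitecture:\n{architecture}"
            )
            # Second model call: README for the generated configuration.
            readme = generate(
                "Generate a detailed README for the Terraform configuration.\n\n"
                f"{terraform}"
            )

            urls = {
                "terraform_url": commit_file("main.tf", terraform),
                "readme_url": commit_file("README.md", readme),
            }
            return {"statusCode": 200, "body": json.dumps(urls)}
        except Exception as exc:
            # Return structured error details for troubleshooting.
            detail = {"error": type(exc).__name__, "detail": str(exc)}
            return {"statusCode": 500, "body": json.dumps(detail)}

    return handler
```

Injecting the three dependencies also makes the handler straightforward to exercise locally with stub functions before wiring in the real Amazon Bedrock and GitHub clients.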

Configure Knowledge Bases for Amazon Bedrock

Follow these steps to set up your knowledge base in Amazon Bedrock:

- On the Amazon Bedrock console, choose Knowledge base in the navigation pane.

- Choose Create knowledge base.

- Enter a clear and descriptive name that reflects the purpose of your knowledge base, such as AWS Account Setup Knowledge Base For Amazon Bedrock.

- Assign a pre-configured IAM role with the necessary permissions. It’s typically best to let Amazon Bedrock create this role for you to make sure it has the correct permissions.

- Upload a JSON file to an S3 bucket with encryption enabled for security. This file should contain a structured list of AWS services and Terraform modules. For the JSON structure, use the following example from the GitHub repository.

- Choose the default embeddings model.

- Allow Amazon Bedrock to create and manage the vector store for you in Amazon OpenSearch Service.

- Review the information for accuracy. Pay special attention to the S3 bucket URI and IAM role details.

- Create your knowledge base.
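To give a sense of what a structured list of AWS services and Terraform modules could look like, the following sketch builds and validates a small catalog before upload. The field names (`service`, `module_source`, `version`, `guidelines`) and the registry path are purely illustrative assumptions. They are not the structure used in the GitHub repository, which you should follow for the actual solution.

```python
import json

# Hypothetical schema: every entry is expected to carry these fields.
REQUIRED_FIELDS = {"service", "module_source", "version", "guidelines"}

# Illustrative catalog with a single made-up entry.
catalog = [
    {
        "service": "Amazon S3",
        "module_source": "app.terraform.io/example-org/s3-bucket/aws",
        "version": "1.0.0",
        "guidelines": ["Enable server-side encryption", "Block public access"],
    },
]


def validate_catalog(entries):
    """Return a list of human-readable problems; an empty list means no missing fields."""
    problems = []
    for index, entry in enumerate(entries):
        missing = REQUIRED_FIELDS - set(entry)
        if missing:
            problems.append(f"entry {index}: missing {sorted(missing)}")
    return problems


# Serialized form that would be written to a file and uploaded to the S3 bucket.
document = json.dumps(catalog, indent=2)
```

Validating the file before upload helps catch incomplete entries early, because gaps in the knowledge base content carry through to the retrieved context and then to the generated Terraform.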

After you deploy and configure these components, when your AWS Landing Zone solution invokes the Lambda function, the following files are generated:

- A Terraform configuration file – This file specifies the infrastructure setup.

- A comprehensive README file – This file documents the security standards embedded within the code, confirming that they align with the security practices outlined in the initial sections. Additionally, this README includes an architectural summary, cost optimization tips, and a detailed cost breakdown for the resources described in the Terraform configuration.

The following screenshot shows an example of the Terraform configuration file.

The following screenshot shows an example of the README file.

Clean up

Complete the following steps to clean up your resources:

- Delete the Lambda function if it’s no longer required.

- Empty and delete the S3 bucket used for Terraform state storage.

- Remove the generated Terraform scripts and README file from the GitHub repo.

- Delete the knowledge base if it’s no longer needed.

Conclusion

The generative AI capabilities of Amazon Bedrock not only streamline the creation of compliant Terraform scripts for AWS deployments, but also act as a pivotal learning aid for beginner cloud engineers transitioning on-premises applications to AWS. This approach accelerates the cloud migration process and helps you adhere to best practices. You can also use the solution to provide value after the migration, enhancing daily operations such as ongoing infrastructure and cost optimization. Although we primarily focused on Terraform in this post, these principles can also enhance your AWS CloudFormation deployments, providing a versatile solution for your infrastructure needs.

Ready to simplify your cloud migration process with generative AI in Amazon Bedrock? Begin by exploring the Amazon Bedrock User Guide to understand how it can support your organization’s cloud journey. For further assistance and expertise, consider using AWS Professional Services to help with your cloud migration and maximize the benefits of Amazon Bedrock.

Unlock the potential for rapid, secure, and efficient cloud adoption with Amazon Bedrock. Take the first step today and discover how it can enhance your organization’s cloud transformation endeavors.

About the Author

Ebbey Thomas specializes in strategizing and developing custom AWS Landing Zone resources with a focus on using generative AI to enhance cloud infrastructure automation. In his role at AWS Professional Services, Ebbey’s expertise is central to architecting solutions that streamline cloud adoption, providing a secure and efficient operational framework for AWS users. He is known for his innovative approach to cloud challenges and his commitment to driving forward the capabilities of cloud services.