Welcome to the Generative AI Report round-up feature here on insideBIGDATA with a special focus on all the new applications and integrations tied to generative AI technologies. We’ve been receiving so many cool news items relating to applications and deployments centered on large language models (LLMs) that we thought it would be a timely service for readers to start a new channel along these lines. An LLM fine-tuned on proprietary data equals an AI application, and this is what these innovative companies are creating. The field of AI is accelerating at such a fast rate that we want to help our loyal global audience keep pace.

Google Cloud and Hugging Face Announce Strategic Partnership to Accelerate Generative AI and ML Development

Google Cloud and Hugging Face announced a new strategic partnership that will allow developers to utilize Google Cloud’s infrastructure for all Hugging Face services, and will enable training and serving of Hugging Face models on Google Cloud.

The partnership advances Hugging Face’s mission to democratize AI and furthers Google Cloud’s support for open source AI ecosystem development. With this partnership, Google Cloud becomes a strategic cloud partner for Hugging Face, and a preferred destination for Hugging Face training and inference workloads. Developers will be able to easily utilize Google Cloud’s AI-optimized infrastructure including compute, tensor processing units (TPUs), and graphics processing units (GPUs) to train and serve open models and build new generative AI applications.

“Google Cloud and Hugging Face share a vision for making generative AI more accessible and impactful for developers,” said Thomas Kurian, CEO at Google Cloud. “This partnership ensures that developers on Hugging Face will have access to Google Cloud’s purpose-built AI platform, Vertex AI, along with our secure infrastructure, which can accelerate the next generation of AI services and applications.”

“From the original Transformers paper to T5 and the Vision Transformer, Google has been at the forefront of AI progress and the open science movement,” said Clement Delangue, CEO of Hugging Face. “With this new partnership, we will make it easy for Hugging Face users and Google Cloud customers to leverage the latest open models together with leading optimized AI infrastructure and tools from Google Cloud including Vertex AI and TPUs to meaningfully advance developers’ ability to build their own AI models.”

HYCU, Inc. Leverages Anthropic to Revolutionize Data Protection through Generative AI Technology

HYCU, Inc., a leader in data protection as a service and one of the fastest growing companies in the industry, announced the HYCU Generative AI Initiative. This project seamlessly integrates generative AI technology, including Anthropic’s AI assistant Claude, with HYCU’s R-Cloud data protection platform, redefining the development process of data protection integrations and creating an easy-to-use way to create SaaS integrations.

HYCU R-Cloud includes the world’s first low-code development platform for data protection that enables SaaS companies and service providers to deliver application-native backup and recovery efficiently and rapidly. HYCU R-Cloud has been recognized for setting the bar for SaaS data backup and protection, thanks to its ease of use and ease of integration for both end users and SaaS providers.

“This development with Anthropic’s frontier generative AI model Claude is more than an integration; it’s a leap forward in the future of data protection,” said Simon Taylor, Founder and CEO, HYCU, Inc. “By harnessing AI, we’re not only accelerating our development processes but also reinforcing our commitment to security and operational efficiency. We’re excited to take R-Cloud to the next level and pioneer this space and set new standards for the industry.”

Pricefx Announces Additional Generative AI Capabilities Across Its Award-Winning Pricing Platform

Pricefx, a leader in cloud-native pricing software, announced it will be incorporating new Generative AI (GenAI) capabilities in its award-winning pricing platform in 2024. The new set of GenAI capabilities will bring conversational experiences to pricing professionals, creating the ultimate simplification of user interactions on the Pricefx platform.

GenAI will power conversational user experiences by allowing users to control the application and receive intended results of various forms with a chat-like experience. For example, a user can describe their request in everyday words and the software will provide the answer and guide them to application screens populated with inputs automatically interpreted by AI. The respective results are summarized to guide users towards actions carrying the highest business impact faster.

These new GenAI features extend the AI technology capabilities already available on the Pricefx platform. Pricefx leverages a wide AI technological landscape for price optimization tasks, ranging from traditional machine learning to agent-based AI and Generative AI. Customers can benefit from Pricefx’s uniquely transparent AI-powered price optimization results as well as from an open and composable AI framework to build flexible and futureproof applications utilizing a customer’s own data science investments. With the introduction of new conversational GenAI capabilities, Pricefx further extends its natively integrated AI, bringing more business advantages to its customers.

“These GenAI features confirm Pricefx’s steady commitment to bringing AI-enabled solutions to market,” said Billy Graham, Chief Product Officer for Pricefx. “Our speed and agility in identifying and adopting relevant technology that brings value to pricing continues to lead the industry. From being the first to deliver a cloud-native SaaS pricing platform to bringing AI-powered price optimization solutions to market, and now natively integrating conversational GenAI into our software, Pricefx is helping customers easily achieve optimal business outcomes that outpace the competition.”

Typeface Announces GA of its New Multimodal AI Content Hub, Expands into Video with TensorTour Acquisition

Typeface, the generative AI platform for enterprise content creation, announced the general availability of its new Multimodal Content Hub, featuring significant advancements that make AI content workflows more accessible to all. The company also announced the acquisition of TensorTour, integrating their advanced AI algorithms, domain-specific models, and deep expertise in multimedia AI content, such as video, audio, and more. Typeface’s proprietary Blend AI, which already leverages top-tier AI platforms from OpenAI, Microsoft, Google Cloud, and more, is now broadening its partner ecosystem with new integrations across leading enterprise applications. This platform expansion and strategic acquisition mark a major step forward in advancing deeply specialized, multimodal AI workflows for widespread enterprise use.

“Enterprises are eager to adopt new mediums with generative AI that understand their unique brand, data, and industry. Our acquisition of TensorTour deepens our expertise in new storytelling mediums and domain-specific AI models and workflows. Coupled with the significant expansion of Typeface’s Multimodal Content Hub and growing partner ecosystem, we’re investing in cutting-edge technology and world-class talent to stay at the forefront of AI innovation to create entirely new, data-enriched content workflows integrated across the entire enterprise fabric,” said Abhay Parasnis, Founder and CEO at Typeface.

Swimm Launches World’s Most Advanced Contextualized Coding Assistant for Accurate and Instant Code Knowledge

Swimm, a leading GenAI-powered coding assistant for contextualized code understanding, announced the launch of /ask Swimm, the most comprehensive solution available for enterprise software development teams that combines an AI-powered chat and human input to provide personalized and accurate code knowledge instantly.

Software developers are continuously trying to ship code faster yet often struggle to find accurate answers to their questions due to a lack of good code documentation hygiene and limited contextualized code understanding, which can render answers that are too generic and unhelpful. In a recent survey conducted by Swimm, 73% of developers surveyed said that code documentation is important to their organization’s productivity; however, 37% said they spend 5 or more hours a week looking for information to understand code or to answer questions about it. This highlights the current gap that exists between the need for effective code understanding and documentation, which is often required when debugging issues with code or when onboarding new developers, and the tools that are currently available.

/ask Swimm is an AI-powered coding assistant providing developers with a multilayered contextual understanding of code, enabling them to significantly boost productivity across the entire development lifecycle. The chat is dynamic and personalized to an organization’s specific codebase, documentation, user interactions and other third-party tools. A fully contextualized conversational chat within the IDE lets developers instantly get answers to any questions about documentation, code, files, repos, or even entire software ecosystems. To gain context, /ask Swimm incorporates factors that are not evident in the code itself, such as business decisions, product design considerations, limitations that were the basis for roads not taken, etc. /ask Swimm automatically captures and updates code-related knowledge in the process, while improving over time with continuous feedback and user-generated documents.

“Some of the biggest names in tech, including Microsoft (Copilot), Google (Duet AI), Amazon (CodeWhisperer), are building code assistance tools. They’re competing on the merits of their analysis capabilities and the LLMs they’re using, but no matter how good they are, a tool doesn’t know what it doesn’t know because it’s lacking the relevant context,” said Oren Toledano, CEO and Co-founder at Swimm. “To provide the most complete picture to engineers, we’ve developed /ask Swimm which is the logical next step in the evolution of code understanding. Context is invaluable especially when refactoring legacy code or trying to understand complex flows and processes in a codebase. By keeping documentation and context up to date with existing code, processing it and feeding it in time to the LLMs, Swimm has solved one of the biggest challenges developers face today.”

SnapLogic Announces GenAI Builder: Revolutionizing Enterprise Applications and Bringing Large Language Models to Every Employee

SnapLogic, a leader in generative integration, unveiled GenAI Builder, a no-code generative AI application development product for enterprise applications and services. Uniquely compatible with legacy mainframe data, modern databases, and APIs, GenAI Builder leverages conversational AI to transform and simplify customer, employee and partner digital experiences.

Generative AI is projected to have a profound impact on global business, with McKinsey estimating it could “add the equivalent of $4.4 trillion annually” through improvement of customer interactions, automation of business processes, conversational business intelligence, and IT acceleration via code generation. Unfortunately, companies seeking to capitalize on generative AI face prohibitive obstacles. McKinsey cites a lack of available AI skills, accuracy of results, and concerns about privacy and security as possible barriers to generative AI adoption in enterprises. SnapLogic plans to eliminate those obstacles.

As the latest addition to SnapLogic’s AI suite, GenAI Builder allows organizations to integrate AI with enterprise data to securely enhance the efficiency, accuracy, and personalization of data for every enterprise employee. This applies to multiple types of data, whether in the cloud or on-prem, and can be applied to critical use cases such as customer support automation, data analysis and reporting, contract and document review, and personalized marketing. GenAI Builder puts the power of LLMs, and the scale of AI, where it matters most: in the hands of every enterprise employee.

“We aren’t just stepping into the future with GenAI Builder, we are teleporting businesses there,” said Jeremiah Stone, Chief Technology Officer at SnapLogic. “Product and IT teams now have the ability to rapidly add high-performing conversational interfaces to their most important digital experiences, changing them from basic tools into intelligent collaborative systems, creating an entirely new realm of capabilities for employees, customers, and partners, delivering unprecedented business value.”

“Instead of relying on multiple experienced Python coders working for days and weeks to create an LLM-based solution, any employee – including those in finance, marketing, HR, and other business departments – can now use GenAI Builder to create solutions in a matter of hours,” said Greg Benson, Chief Scientist at SnapLogic. “By using natural language prompts to create powerful generative AI solutions while simultaneously connecting disparate data sources, global businesses can save millions and accelerate their LLM projects tenfold.”

Deci Works With Qualcomm to Make Generative AI Accessible for Wide Range of Applications

Deci, the deep learning company harnessing artificial intelligence (AI) to build AI, announced it is collaborating with Qualcomm Technologies, Inc. to introduce advanced Generative Artificial Intelligence (AI) models tailored for the Qualcomm® Cloud AI 100, Qualcomm Technologies’ performance and cost-optimized AI inference solution designed for Generative AI and large language models (LLMs). This working relationship between the two companies is designed to make AI accessible for a wider range of AI-powered applications, resulting in the democratization of Generative AI’s transformative power for developers everywhere.

“Together with Qualcomm Technologies we are pushing the boundaries of what’s possible in AI efficiency and performance,” said Yonatan Geifman, CEO and co-founder of Deci. “Our joint efforts streamline the deployment of advanced AI models on Qualcomm Technologies’ hardware, making AI more accessible and cost-effective, and economically viable for a wider range of applications. Our work together is a testament to our vision of making the transformational power of generative AI available to all.”

Typeform Launches ‘Formless’: The AI-Powered Form Builder That Simulates Real Conversations

Typeform, the intuitive form builder and conversational data collection platform, announced the public launch of Formless, an AI form builder powered by leading AI systems from OpenAI. With Formless, users can collect structured data through two-way conversations with forms that ask questions, as well as answer respondent questions. Formless enables companies to collect high-quality customer data at scale, all while delivering an engaging customer experience.

Gathering zero-party data, defined as information that customers voluntarily share with companies, is critical to creating exceptional customer experiences today; however, the stakes for data collection are higher than ever. McKinsey & Company research shows that 71% of consumers expect companies to deliver personalized interactions, and 76% get frustrated when this doesn’t happen. Focused on harnessing the power of artificial intelligence to create human-centered web experiences, Typeform is providing companies with innovative solutions that balance business needs with customer expectations.

“At Typeform, we believe in a world where businesses give as much to people as they’re trying to get, and we’ve been diligently crafting this vision into reality,” said David Okuniev, co-founder, Typeform. “The beauty of Formless is that it enables companies to get great data, while also giving respondents a great experience. In an increasingly digital world, people don’t want interactions that make them feel like just a number. Formless offers an ideal blend of the machine-driven efficiency and human-like personalization that today’s consumers crave.”

Airbyte and Vectara Partner to Simplify Data Access for Generative AI Applications

Airbyte, creators of a leading open-source data movement infrastructure, has partnered with Vectara, the trusted Generative AI (GenAI) platform, to deliver an integration that makes it easier for developers to build scalable enterprise-grade GenAI applications using Large Language Models (LLMs).

“What sets Vectara apart is that its technology works on businesses’ own data. By integrating with Airbyte, it’s now easier and more efficient, with access to more than 350 data sources,” said Michel Tricot, co-founder and CEO, Airbyte. “It’s a breakthrough that gives Vectara users greater productivity and accuracy with fewer hallucinations in their results.”

Vectara delivers generative AI capabilities for developers via an easy-to-use API. Often referred to as “RAG-in-a-box”, Vectara’s platform simplifies the development of GenAI applications by taking care of document chunking, embedding, vector storage, retrieval, and summarization.
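
For readers unfamiliar with the stages named above, here is a minimal Python sketch of a RAG pipeline. It is a toy illustration under stated assumptions, not Vectara’s API: the names `chunk`, `embed`, `VectorStore`, and `build_prompt` are hypothetical, a bag-of-words count vector stands in for a neural embedding model, and the summarization stage is represented only by the prompt that would be handed to an LLM.

```python
import math
import re
from collections import Counter


def chunk(text: str, max_words: int = 12) -> list[str]:
    """Document chunking: split text into pieces of at most max_words words."""
    words = text.split()
    return [" ".join(words[i:i + max_words]) for i in range(0, len(words), max_words)]


def embed(text: str) -> Counter:
    """Toy embedding (assumption): word counts instead of a neural model."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


class VectorStore:
    """Hypothetical in-memory vector storage with brute-force retrieval."""

    def __init__(self) -> None:
        self.items = []  # list of (chunk text, embedding) pairs

    def add(self, text: str) -> None:
        for piece in chunk(text):
            self.items.append((piece, embed(piece)))

    def search(self, query: str, k: int = 2) -> list[str]:
        q = embed(query)
        ranked = sorted(self.items, key=lambda item: cosine(q, item[1]), reverse=True)
        return [text for text, _ in ranked[:k]]


def build_prompt(question: str, passages: list[str]) -> str:
    """Assemble the grounded prompt an LLM would answer or summarize from."""
    context = "\n".join(f"- {p}" for p in passages)
    return f"Answer using only this context:\n{context}\n\nQuestion: {question}"


store = VectorStore()
store.add("Invoices are archived after ninety days. Archived invoices can be restored by an administrator.")
store.add("The cafeteria opens at eight and serves lunch until two in the afternoon.")

question = "When are invoices archived?"
top = store.search(question, k=1)
prompt = build_prompt(question, top)
print(prompt)
```

In a production system each stage would be replaced by a managed component (a learned embedding model, an approximate nearest-neighbor index, an LLM call), which is the work a hosted RAG service takes off the developer’s hands.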

“Vectara is the first Retrieval Augmented Generation (RAG) as-a-service to integrate with Airbyte, which makes it super simple to move volumes of data for scalable GenAI applications,” said Bader Hamdan, Vectara’s ecosystem chief. “Value co-creation is intrinsic to Vectara’s ecosystem strategy, and this integration allows customers to combine Airbyte’s scalable data ingestion functionality with Vectara’s trusted GenAI Platform to create robust and secure RAG-enabled enterprise applications.”

Pecan AI Introduces Predictive GenAI to Transform Enterprise AI Efforts

Pecan AI, a leader in AI-based predictive analytics for data analysts and business teams, today announced Predictive GenAI, a unique combination of predictive analytics and generative AI that kickstarts fast, easy predictive modeling. Predictive GenAI marks a new step in the evolution of enterprise AI adoption, where generative AI and predictive AI work together to unlock the value of businesses’ data.

Amidst the growing hype of GenAI in the enterprise, actual adoption is still lagging. Many businesses have yet to unlock a true use case that will drive better customer, employee, and product innovation outcomes. Today, Pecan’s Predictive GenAI creates a significant opportunity for scalable AI-powered business value. Pecan’s Predictive GenAI empowers users to translate business concerns into a new predictive model that can solve the issue faster than ever.

“When used alone, ChatGPT and other similar tools based on large language models can’t solve predictive business needs,” said Zohar Bronfman, CEO and co-founder, Pecan AI. “Our mission has always been to democratize data science, and today, we’ve identified a simple way to marry the power of predictive analytics and GenAI. Pecan’s Predictive GenAI is an industry-first solution that rapidly advances AI adoption, solves real business challenges, and improves outcomes.”

DataStax Launches New Data API to Dramatically Simplify GenAI Application Development

DataStax, the company that powers generative AI (GenAI) applications with relevant, scalable data, announced the general availability of its Data API, a one-stop API for GenAI, that provides all the data and a complete stack for production GenAI and retrieval augmented generation (RAG) applications with high relevancy and low latency. Also debuting today is a completely updated developer experience for DataStax Astra DB, the best vector database for building production-level AI applications.

The new vector Data API and experience make the proven, petabyte-scale power of Apache Cassandra® available to JavaScript, Python, or full-stack application developers in a more intuitive experience for AI development. It is specifically designed for ease of use, while offering up to 20% higher relevancy, 9x higher throughput, and up to 74x faster response times than Pinecone, another vector database, by using the JVector search engine. It introduces an intuitive dashboard, efficient data loading and exploration tools, and seamless integration with leading AI and machine learning (ML) frameworks.

“Astra DB is ideal for JavaScript and Python developers, simplifying vector search and large-scale data management, putting the power of Apache Cassandra behind a user-friendly but powerful API,” said Ed Anuff, chief product officer, DataStax. “This release redefines how software engineers build GenAI applications, offering a streamlined interface that simplifies and accelerates the development process for AI engineers.”

Databricks Announces Data Intelligence Platform for Communications, Offering Providers a Data Lakehouse with Generative AI Capabilities

Databricks, the Data and AI company, launched the Data Intelligence Platform for Communications, the world’s first unified data and AI platform tailored for telecommunications carriers and network service providers. With the Data Intelligence Platform for Communications, Communication Service Providers (CSPs) benefit from a unified foundation for their data and AI, and can gain a holistic view of their networks, operations, and customer interactions without sacrificing data privacy or confidential IP. Built on an open lakehouse architecture, the Data Intelligence Platform for Communications combines industry-leading data management, governance, and data sharing with enterprise-ready generative AI and machine learning (ML) tools.

The Communications industry is undergoing one of the most significant periods of change in its history – marked by a dramatic increase in global traffic and a need for more network equipment, compounded by consumer demands for higher quality services and customer experiences (CX). Databricks created the Data Intelligence Platform for Communications to help organizations navigate these dynamics, empowering CSPs to better forecast market trends, predict demand patterns, monetize their data as a product, and democratize data insights to all employees, regardless of technical expertise. Early adopters of the Data Intelligence Platform include industry leaders like AT&T, which leverages the platform to protect its customers from fraud and increase operational efficiencies.

“The need for a modern and unified data analytics and AI platform has never been greater. The Data Intelligence Platform for Communications was designed to meet the dynamic needs of customers at scale, while delivering enterprise-grade security and intelligently reducing costs to operate,” said Steve Sobel, Global Industry Leader for Communications, Media and Entertainment at Databricks. “It seamlessly creates avenues for CSPs to personalize, monetize, and innovate in the communications industry to decrease churn, improve service, and create new revenue streams with data they already have.”

Gen-AI tools deliver unprecedented, near-perfect data accuracy

Stratio BD, a leading generative AI and data specialist, announced that generative AI tools are up to 99% accurate when used with the Stratio Business Semantic Data Layer and can be trusted to inform enterprise decision making, following a landmark test. As a result, employees with any level of technical expertise can use generative AI tools to run complex data queries and immediately receive accurate answers, provided Stratio’s Business Semantic Data Layer is also applied.

The results, produced for the first time by linking ChatGPT analysis of independent datasets with Stratio BD’s Business Semantic Data Layer, confirm that generative tools can now deliver reliable results from data analysis and interrogation. This means that businesses can rely on gen AI analysis to produce accurate answers, allowing employees with any level of technical expertise to ask data-related questions in their natural language, and receive answers with a high level of accuracy, immediately.

The test conditions replicate a benchmark test conducted by data.world two months ago, which resulted in LLMs delivering three times greater efficiencies when connected to a knowledge graph. This test was subsequently validated in December 2023 by dbt labs, which saw the accuracy rate hit 69%. In this latest replication of the test, Stratio BD found that ChatGPT-4 was able to accurately answer data queries run on large amounts of data up to 99% of the time.

The significant increase in accuracy was the direct result of using Stratio BD’s Business Semantic Data Layer, which connects large data sets with business meaning via semantic ontologies and a knowledge graph, so that they can be easily accessed and interpreted by generative AI tools and employees alike. The benchmark test involved asking ChatGPT-4 to answer a set of insurance-related business questions, such as ‘How many claims do we have?’ and ‘What is the average time to settle a claim?’, using information from a standardised dataset.

ChatGPT was first asked to answer these questions without the support of Stratio BD’s Business Semantic Data Layer and achieved an accuracy rate of 17%. The LLM was then asked the same questions with the support of a third-party knowledge graph and reached an accuracy rate of 69%. However, when using Stratio’s solution, this figure increased dramatically to up to 99%.

Román Martín, Chief Technology Officer at Stratio BD, said: “We’ve crossed a new frontier in the application of generative AI tools for enterprise use. Previously, business concerns about the reliability of AI data interrogation were widespread. Delivering an accuracy rate of 99% is clearly a high bar for reliability when it comes to business data use cases and demonstrates that generative AI tools can be trusted to run complex data queries at an enterprise level, provided they have access to high-quality data. By linking ChatGPT to our Business Semantic Data Layer solution, we can deliver highly accurate answers, without errors or hallucinations, and have solved the largest problem associated with LLMs. Businesses across every industry now have a viable way to significantly bolster productivity by automating otherwise lengthy tasks and queries and interrogating their business data quickly and effectively.”

Pinecone reinvents the vector database to let companies build knowledgeable AI

Pinecone, a leading vector database company, announced a revolutionary vector database that lets companies build more knowledgeable AI applications: Pinecone serverless. Multiple innovations including a first-of-its-kind architecture and a truly serverless experience deliver up to 50x cost reductions and eliminate infrastructure hassles, allowing companies to bring remarkably better GenAI applications to market faster.

One of the keys to success is providing large amounts of data on-demand to the Large Language Models (LLMs) inside GenAI applications. Research from Pinecone found that simply making more data available for context retrieval reduces the frequency of unhelpful answers from GPT-4 by 50%[1], even on information it was trained on. The effect is even greater for questions related to private company data. Additionally, the research found the same level of answer quality can be achieved with other LLMs, as long as enough data is made available. This means companies can significantly improve the quality of their GenAI applications and have a choice of LLMs just by making more data (or “knowledge”) available to the LLM. Yet storing and searching through sufficient amounts of vector data on-demand can be prohibitively expensive even with a purpose-built vector database, and practically impossible using relational or NoSQL databases.

Pinecone serverless is an industry-changing vector database that lets companies add practically unlimited knowledge to their GenAI applications. Since it is truly serverless, it completely eliminates the need for developers to provision or manage infrastructure and allows them to build GenAI applications more easily and bring them to market much faster. As a result, developers with use cases of any size can build more reliable, effective, and impactful GenAI applications with any LLM of their choice, leading to an imminent wave of incredible GenAI applications reaching the market. This wave has already started, with companies like Notion, CS Disco, Gong and over a hundred others already using Pinecone serverless.

“From the beginning, our mission has been to help every developer build remarkably better applications through the magic of vector search,” said Edo Liberty, Founder & CEO of Pinecone. “After creating the first and today’s most popular vector database, we’re taking another leap forward in making the vector database even more affordable and completely hassle-free.”

Revolutionizing Retail: Algolia Unveils Groundbreaking Generative AI for Shopping Experiences

Algolia, the end-to-end AI Search and Discovery platform, announced its strategic initiative aimed at advancing its Generative AI capabilities tailored specifically to deliver unparalleled search and discovery experiences for merchants and shoppers. This forward-thinking approach underscores Algolia’s commitment to creating innovative, customer-centric solutions that align with the dynamic needs of today’s online shoppers.

Algolia’s Chief Product Officer, Bharat Guruprakash, noted that the first place Generative AI will be used is in the search bar. “Shoppers are becoming more expressive when telling the search bar what they want. Generative AI in search uses Large Language Models and can understand what customers are asking for and then match items inside the product catalog much more accurately. However, to more fully enable e-commerce companies, we are packaging these capabilities into Generative Shopping Experiences to enrich and personalize a shopper’s journey.”

Relativity Announces Expansions to Relativity aiR, its Suite of Generative AI Solutions

Relativity, a global legal technology company, announced the limited availability launch of Relativity aiR for Review and shared plans to add products to Relativity aiR addressing use cases such as privilege review and case strategy. Relativity aiR is Relativity’s new suite of fit-for-purpose generative AI solutions that empower users to transform their approach to litigation and investigations.

“The generative AI that powers Relativity aiR is key to unlocking untapped potential that will transform legal work, enabling faster, high-quality insights and equipping our community to solve the most complex legal data challenges,” said Phil Saunders, CEO of Relativity. “The possibilities are immense and exciting, but what remains critically important as we embrace a future of next generation AI is usefulness. This is why every Relativity aiR solution is tailored to help our community solve challenges specific to their needs, backed by the deep insights gained from close collaboration with our customers and partners.”

p0 launches from stealth with $6.5m to stop catastrophic software failures using Generative AI

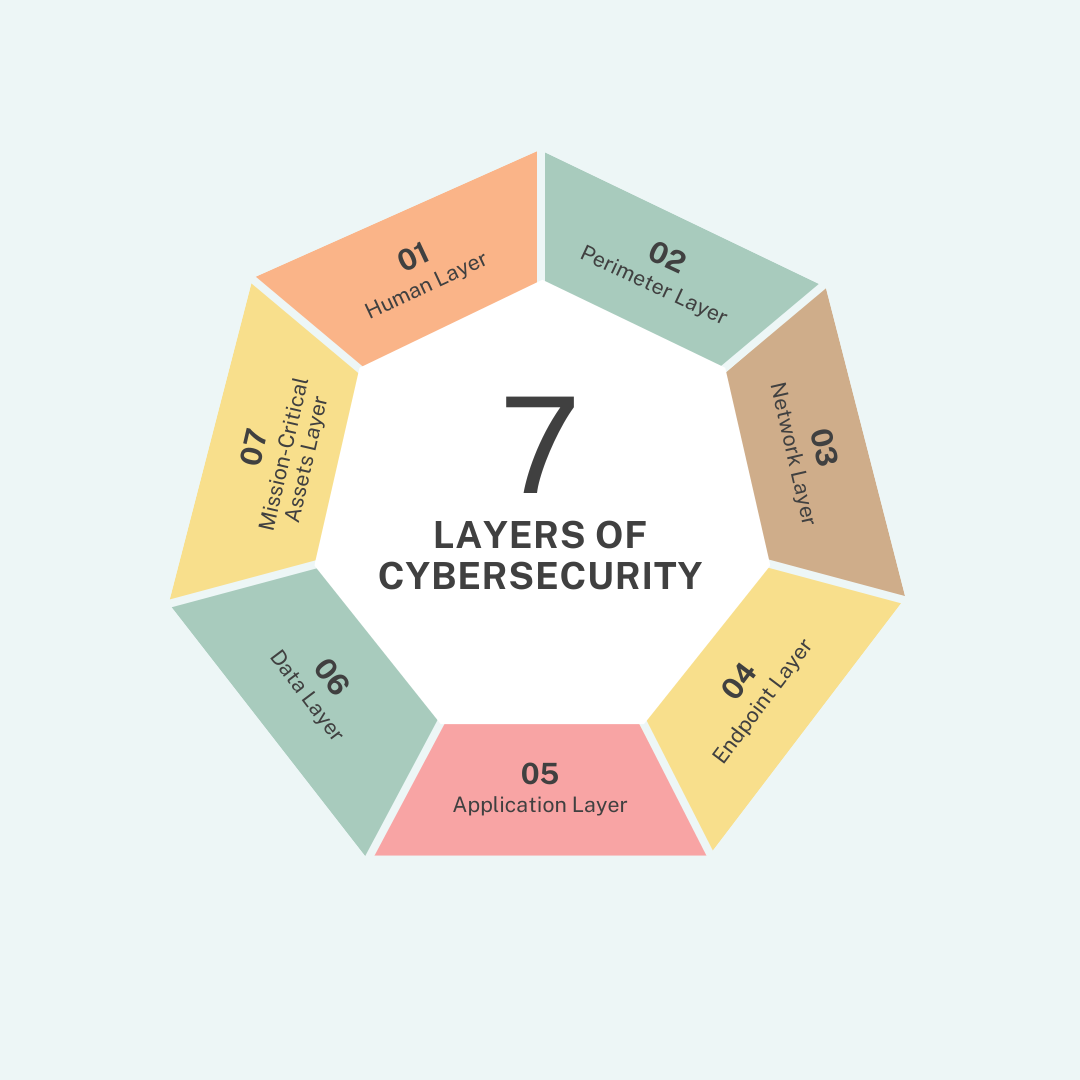

In an increasingly competitive and malicious environment, vulnerabilities in enterprise codebases can lead to catastrophic security failures. Many times these can be fatal for businesses built on a foundation of customer trust and reliability. Data security is the most fundamental promise that a business can make to its users. Despite this, we have grown accustomed to hearing about massive data exploits on an almost daily basis. It is no surprise that recent research has found that 71% of software engineers are concerned about software reliability at their workplace.

p0 has launched from stealth and today announces that it has raised $6.5m from Lightspeed Venture Partners with participation from Alchemy Ventures to help stop catastrophic software failures. p0’s proprietary technology leverages Large Language Models (LLMs) to identify safety and security issues in software before it is ever run in a production environment. p0’s technology provides a single-click solution with no need for additional user configuration.

p0 can handle a wide range of software issues including data integrity issues and validation failures (including those affecting data security), alongside speed and timeout issues. p0 noiselessly surfaces intelligent and actionable output far more effectively than traditional software reliability and security solutions. By simply connecting their Git code repositories to p0, developer teams can rapidly gain insight beyond what traditional rule-based static analysis tools can provide, with the ability to run code scans in just one click.

Prakash Sanker, Co-Founder and CTO of p0, said: “Across the world, recent catastrophic software failures have led to real-world impact on human life and poor outcomes for businesses. At p0, we are determined to stop these safety and security issues affecting our society. Leveraging AI, we can go further than traditional software reliability and security tools to ensure society sees the benefits of technology with less risk.”

Avetta Announces Industry-First Generative AI Risk Assistant for Managing Contractor Compliance

Avetta®, a leading provider of supply chain risk management (SCRM) software, today announced AskAva, a generative AI-powered risk assistant that will accelerate contractor compliance and advance contractor safety and sustainability.

Unlike other solutions that run AI models over external datasets, AskAva is built on a new AI engine embedded in the platform, combining Avetta’s proprietary data with ChatGPT. AskAva adds more capabilities to Avetta’s award-winning Connect platform, enabling global organizations to automate contractor risk management at scale while educating their supply chain vendors about safety best practices.

In the United States, the contractor-to-employee ratio has increased 63% since 2019. In Europe, contractor positions surged 24% in 2022. Additionally, 60-70% of contracted work is outsourced to subcontractors. However, contractors represent a growing, disproportionate number of incidents, injuries and fatalities at worksites. Compared to their full-time counterparts, supply chain contractors typically have fewer safety resources and inadequate safety systems. AskAva works as a personalized safety assistant, leveraging generative AI to help large organizations reduce accidents in their supply chain.

“We have entered a new age of using AI to solve some of the most complex supply chain risk problems for our global customers,” said Arshad Matin, CEO of Avetta. “We made the investment in Avetta several years ago to build a modern, secure and global SaaS platform. This foundation has enabled us to leverage innovations like generative AI to meet our customers’ current and future needs.”
