Customers are increasingly turning to product reviews to make informed decisions in their shopping journey, whether they’re purchasing an everyday item like a kitchen towel or making a major purchase like a car. These reviews have become an essential source of information, giving shoppers access to the opinions and experiences of other customers. As a result, product reviews are a crucial aspect of any store, offering valuable feedback and insights to help inform purchase decisions.

Amazon has one of the largest stores with hundreds of millions of items available. In 2022, 125 million customers contributed nearly 1.5 billion reviews and ratings to Amazon stores, making online reviews at Amazon a solid source of feedback for customers. At the scale of product reviews submitted every month, it is essential to verify that these reviews align with Amazon Community Guidelines regarding acceptable language, words, videos, and images. This practice is in place to guarantee customers receive accurate information regarding the product, and to prevent reviews from including inappropriate language, offensive imagery, or any type of hate speech directed towards individuals or communities. By enforcing these guidelines, Amazon can maintain a safe and inclusive environment for all customers.

Content moderation automation allows Amazon to scale the process while maintaining high accuracy. It’s a complex problem space with unique challenges, requiring different techniques for text, images, and videos. Images are an important component of product reviews, often making a more immediate impact on customers than text. With Amazon Rekognition Content Moderation, Amazon is able to automatically detect harmful images in product reviews with higher accuracy, reducing reliance on human reviewers to moderate such content. Rekognition Content Moderation has helped improve the well-being of human moderators and achieve significant cost savings.

Moderation with self-hosted ML models

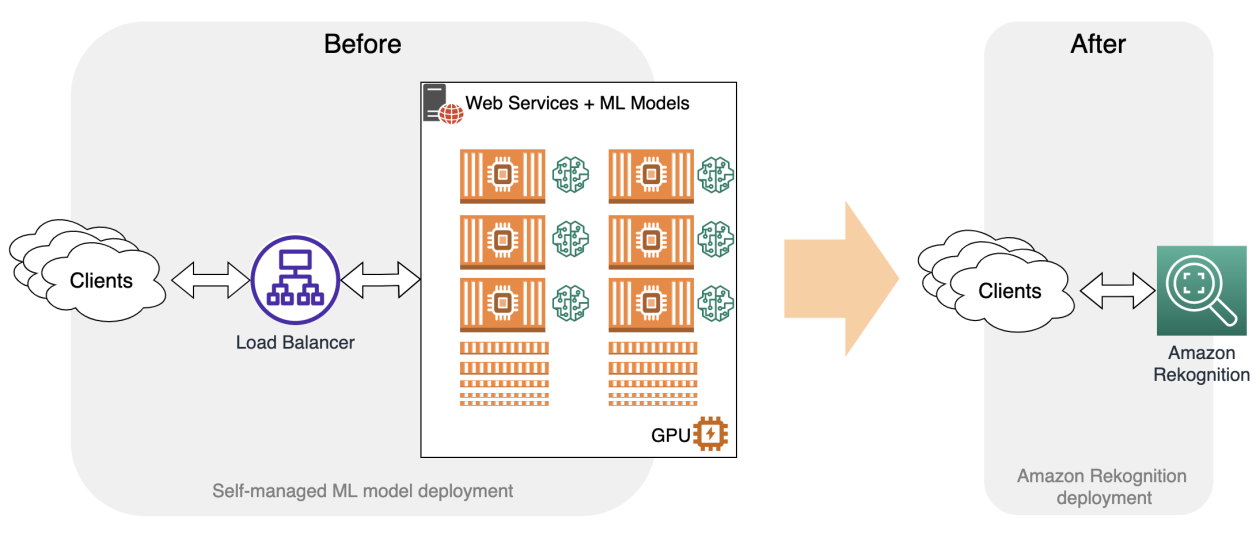

The Amazon Shopping team designed and implemented a moderation system that uses machine learning (ML) in conjunction with human-in-the-loop (HITL) review to ensure product reviews are about the customer experience with the product and don’t contain inappropriate or harmful content as per the community guidelines. The image moderation subsystem, as illustrated in the diagram, used multiple self-hosted and self-trained computer vision models to detect images that violate Amazon guidelines. Based on the ML models’ output, the decision handler determines the moderation action and provides reasons for its decision: whether the image requires further review by a human moderator or can be automatically approved or rejected.
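The routing logic of a decision handler like the one described above can be sketched as follows. This is a minimal illustration, not the Amazon Shopping team's implementation: the `decide` function, the `Action` and `Decision` types, the score format (one violation score between 0 and 1 per category), and both threshold values are assumptions made for the example.

```python
from dataclasses import dataclass
from enum import Enum


class Action(Enum):
    APPROVE = "approve"
    REJECT = "reject"
    HUMAN_REVIEW = "human_review"


@dataclass(frozen=True)
class Decision:
    action: Action
    reasons: tuple[str, ...]


# Hypothetical thresholds, chosen only for illustration.
REJECT_AT = 0.95      # at or above this score, reject automatically
APPROVE_BELOW = 0.20  # below this score in every category, approve automatically


def decide(scores: dict[str, float]) -> Decision:
    """Map per-category violation scores (0..1) to a moderation action."""
    # Any high-confidence violation rejects the image outright.
    rejected = sorted(c for c, s in scores.items() if s >= REJECT_AT)
    if rejected:
        reasons = tuple(f"{c}: high-confidence violation" for c in rejected)
        return Decision(Action.REJECT, reasons)

    # Scores in the uncertain band are routed to a human moderator.
    uncertain = sorted(c for c, s in scores.items() if s >= APPROVE_BELOW)
    if uncertain:
        reasons = tuple(f"{c}: uncertain score" for c in uncertain)
        return Decision(Action.HUMAN_REVIEW, reasons)

    # Everything else is approved without human review.
    return Decision(Action.APPROVE, ("all scores below approval threshold",))
```

In a design like this, the automation rate is the share of images that fall outside the uncertain band, so more accurate models allow the band to be narrowed and fewer images to be sent to human moderators.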

With these self-hosted ML models, the team started by automating decisions on 40% of the images received as part of the reviews and continuously worked on improving the solution through the years while facing several challenges:

- Ongoing efforts to improve automation rate – The team wanted to improve the accuracy of the ML algorithms in order to increase the automation rate. This required continuous investment in data labeling, data science, and MLOps for model training and deployment.

- System complexity – The complexity of the architecture required investment in MLOps to ensure the ML inference process scaled efficiently to meet the growing content submission traffic.

Replace self-hosted ML models with the Rekognition Content Moderation API

Amazon Rekognition is a managed artificial intelligence (AI) service that offers pre-trained models through an API for image and video moderation. It has been widely adopted in industries such as ecommerce, social media, gaming, and online dating to moderate user-generated content (UGC). This includes a range of content types, such as product reviews, user profiles, and social media posts.

Rekognition Content Moderation automates and streamlines image and video moderation workflows without requiring ML experience. Amazon Rekognition customers can process millions of images and videos, efficiently detecting inappropriate or unwanted content, with fully managed APIs and customizable moderation rules to keep users safe and the business compliant.

The team successfully migrated a subset of self-managed ML models in the image moderation system for nudity and not safe for work (NSFW) content detection to the Amazon Rekognition Detect Moderation API, taking advantage of the highly accurate and comprehensive pre-trained moderation models. With the high accuracy of Amazon Rekognition, the team has been able to automate more decisions, save costs, and simplify their system architecture.
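For readers who want to see what such a call looks like, here is a minimal sketch using the `detect_moderation_labels` operation of the boto3 Rekognition client. The helper names `moderate_image` and `default_client`, the Region, and the confidence value are assumptions for illustration, and the post does not describe how the Amazon Shopping team invokes the service.

```python
def default_client(region_name: str = "us-east-1"):
    """Create a Rekognition client (requires boto3 and AWS credentials)."""
    import boto3  # imported lazily so the helper below can be tested offline

    return boto3.client("rekognition", region_name=region_name)


def moderate_image(client, image_bytes: bytes,
                   min_confidence: float = 50.0) -> list[tuple[str, float]]:
    """Return (label name, confidence) pairs detected in one image.

    `client` is any object exposing detect_moderation_labels, normally the
    boto3 Rekognition client. `min_confidence` is an illustrative value on
    the 0-100 scale that Rekognition uses for confidence.
    """
    response = client.detect_moderation_labels(
        Image={"Bytes": image_bytes},
        MinConfidence=min_confidence,
    )
    return [
        (label["Name"], label["Confidence"])
        for label in response["ModerationLabels"]
    ]


# Example usage (not run here, because it needs credentials and an image file):
#   with open("review-photo.jpg", "rb") as f:
#       print(moderate_image(default_client(), f.read()))
```

An empty result means no moderation label met the confidence floor. What to do with a non-empty result (reject, approve, or route to a human moderator) remains a decision for the calling system.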

Improved accuracy and expanded moderation categories

The implementation of the Amazon Rekognition image moderation API has resulted in higher accuracy for detection of inappropriate content. As a result, approximately 1 million additional images per year are moderated automatically, without the need for any human review.

Operational excellence

The Amazon Shopping team was able to simplify the system architecture, reducing the operational effort required to manage and maintain the system. This approach has saved them months of DevOps effort per year, which means they can now allocate their time to developing innovative features instead of spending it on operational tasks.

Cost reduction

The high accuracy of Rekognition Content Moderation has enabled the team to send fewer images, including potentially inappropriate ones, for human review. This has reduced the cost associated with human moderation and allowed moderators to focus their efforts on higher-value business tasks. Combined with the DevOps efficiency gains, the Amazon Shopping team achieved significant cost savings.

Conclusion

Migrating from self-hosted ML models to the Amazon Rekognition Moderation API for product review moderation can provide many benefits for businesses, including significant cost savings. By automating the moderation process, online stores can quickly and accurately moderate large volumes of product reviews, improving the customer experience by ensuring that inappropriate or spam content is quickly removed. Additionally, by using a managed service like the Amazon Rekognition Moderation API, companies can reduce the time and resources needed to develop and maintain their own models, which can be especially useful for businesses with limited technical resources. The API’s flexibility also allows online stores to customize their moderation rules and thresholds to fit their specific needs.
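As one way to picture customized rules and thresholds, the sketch below applies a different confidence threshold to each moderation category. It assumes label dictionaries with `Name`, `ParentName`, and `Confidence` keys, as returned in the `ModerationLabels` list of the Rekognition response. The function name, the category names, and all threshold values are hypothetical, and the rollup uses only each label's immediate parent.

```python
# Hypothetical per-category thresholds on Rekognition's 0-100 confidence scale.
THRESHOLDS = {"Explicit Nudity": 60.0, "Violence": 90.0}
DEFAULT_THRESHOLD = 80.0  # applied to categories not listed above


def flagged_categories(labels, thresholds=THRESHOLDS, default=DEFAULT_THRESHOLD):
    """Return {category: highest confidence} for categories over their threshold."""
    flagged = {}
    for label in labels:
        # Roll a label up to its immediate parent; top-level labels have an
        # empty ParentName, so they are used as their own category.
        category = label.get("ParentName") or label["Name"]
        limit = thresholds.get(category, default)
        if label["Confidence"] >= limit:
            flagged[category] = max(flagged.get(category, 0.0), label["Confidence"])
    return flagged
```

A store that wants to be stricter about one category than another only changes the threshold table, not the detection call.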

Learn more about content moderation on AWS and our content moderation ML use cases. Take the first step towards streamlining your content moderation operations with AWS.

About the Authors

Shipra Kanoria is a Principal Product Manager at AWS. She is passionate about helping customers solve their most complex problems with the power of machine learning and artificial intelligence. Before joining AWS, Shipra spent over 4 years at Amazon Alexa, where she launched many productivity-related features on the Alexa voice assistant.

Luca Agostino Rubino is a Principal Software Engineer in the Amazon Shopping team. He works on Community features like Customer Reviews and Q&As, focusing through the years on Content Moderation and on scaling and automation of Machine Learning solutions.

Lana Zhang is a Senior Solutions Architect at AWS WWSO AI Services team, specializing in AI and ML for Content Moderation, Computer Vision, Natural Language Processing and Generative AI. With her expertise, she is dedicated to promoting AWS AI/ML solutions and assisting customers in transforming their business solutions across diverse industries, including social media, gaming, e-commerce, media, advertising & marketing.
